Use batch endpoints for batch scoring

APPLIES TO:

Azure CLI ml extension v2 (current)

Python SDK azure-ai-ml v2 (current)

Batch endpoints provide a convenient way to run inference over large volumes of data. They simplify the process of hosting your models for batch scoring, so you can focus on machine learning, not infrastructure. For more information, see What are Azure Machine Learning endpoints?.

Use batch endpoints when:

- You have expensive models that require a longer time to run inference.

- You need to perform inference over large amounts of data, distributed in multiple files.

- You don't have low latency requirements.

- You can take advantage of parallelization.

In this article, you'll learn how to use batch endpoints to do batch scoring.

Tip

We suggest that you read the Scenarios sections (see the navigation bar at the left) to learn more about how to use batch endpoints in specific scenarios, including NLP and computer vision, or how to integrate them with other Azure services.

About this example

In this example, we deploy a model that solves the classic MNIST ("Modified National Institute of Standards and Technology") digit recognition problem, and we use it to perform batch inferencing over large amounts of data (image files). In the first section of this tutorial, we create a batch deployment with a model created using Torch. This deployment becomes the default one in the endpoint. In the second half, we create a second deployment using a model created with TensorFlow (Keras), test it out, and then switch the endpoint to use the new deployment as the default.

The information in this article is based on code samples contained in the azureml-examples repository. To run the commands locally without having to copy/paste YAML and other files, first clone the repo. Then, change directories to either cli/endpoints/batch/deploy-models/mnist-classifier if you're using the Azure CLI or sdk/python/endpoints/batch/deploy-models/mnist-classifier if you're using the Python SDK.

git clone https://github.com/Azure/azureml-examples --depth 1

cd azureml-examples/cli/endpoints/batch/deploy-models/mnist-classifier

Follow along in Jupyter Notebooks

You can follow along with this sample in a Jupyter notebook. In the cloned repository, open the notebook: mnist-batch.ipynb.

Prerequisites

Before following the steps in this article, make sure you have the following prerequisites:

- The Azure CLI and the ml extension to the Azure CLI. For more information, see Install, set up, and use the CLI (v2).

Important

The CLI examples in this article assume that you are using the Bash (or compatible) shell. For example, from a Linux system or Windows Subsystem for Linux.

- An Azure Machine Learning workspace. If you don't have one, use the steps in Install, set up, and use the CLI (v2) to create one.

Connect to your workspace

First, connect to the Azure Machine Learning workspace where you're going to work.

az account set --subscription <subscription>

az configure --defaults workspace=<workspace> group=<resource-group> location=<location>

Create compute

Batch endpoints run on compute clusters. They support both Azure Machine Learning Compute clusters (AmlCompute) and Kubernetes clusters. Clusters are a shared resource, so one cluster can host one or many batch deployments (along with other workloads if desired).

This article uses a compute created here named batch-cluster. Adjust as needed and reference your compute using azureml:<your-compute-name> or create one as shown.

az ml compute create -n batch-cluster --type amlcompute --min-instances 0 --max-instances 5

Note

You are not charged for compute at this point because the cluster remains at 0 nodes until a batch endpoint is invoked and a batch scoring job is submitted. Learn more about how to manage and optimize cost for AmlCompute.

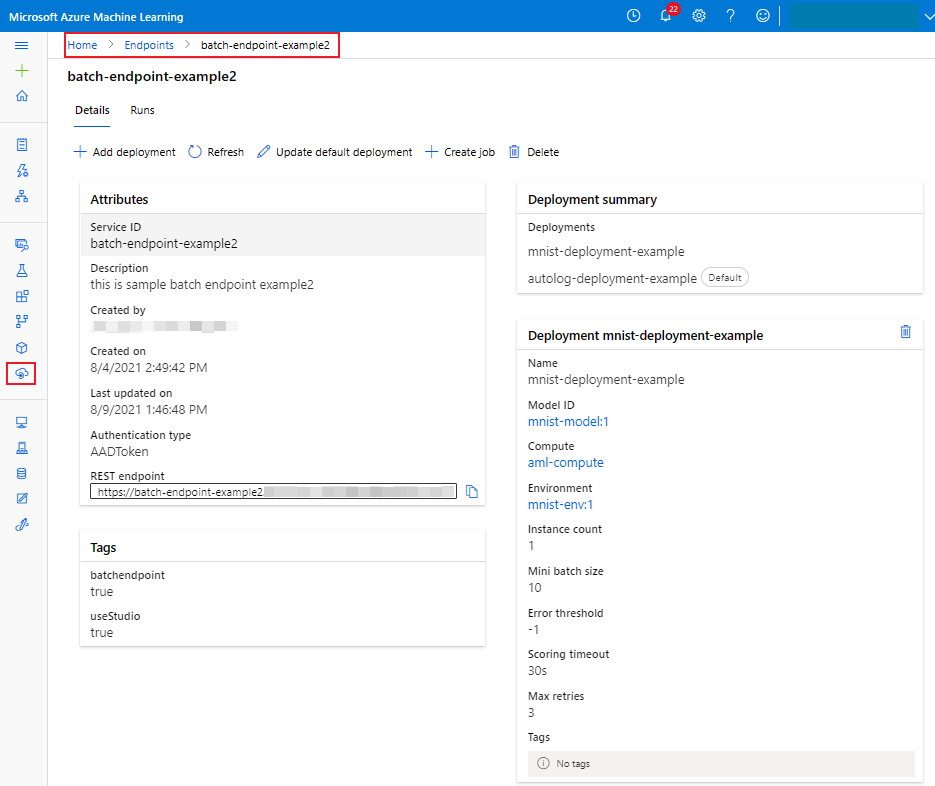

Create a batch endpoint

A batch endpoint is an HTTPS endpoint that clients can call to trigger a batch scoring job. A batch scoring job is a job that scores multiple inputs (for more, see What are batch endpoints?). A batch deployment is a set of compute resources hosting the model that does the actual batch scoring. One batch endpoint can have multiple batch deployments.

Tip

One of the batch deployments will serve as the default deployment for the endpoint. The default deployment will be used to do the actual batch scoring when the endpoint is invoked. Learn more about batch endpoints and batch deployment.

Steps

Decide on the name of the endpoint. The name of the endpoint will end up in the URI associated with your endpoint. Because of that, batch endpoint names need to be unique within an Azure region. For example, there can be only one batch endpoint with the name mybatchendpoint in chinaeast2.

Configure your batch endpoint

The following YAML file defines a batch endpoint, which you can include in the CLI command for batch endpoint creation.

endpoint.yml

$schema: https://azuremlschemas.azureedge.net/latest/batchEndpoint.schema.json
name: mnist-batch
description: A batch endpoint for scoring images from the MNIST dataset.
auth_mode: aad_token

The following table describes the key properties of the endpoint. For the full batch endpoint YAML schema, see CLI (v2) batch endpoint YAML schema.

| Key | Description |
| --- | --- |
| name | The name of the batch endpoint. Needs to be unique at the Azure region level. |
| description | The description of the batch endpoint. This property is optional. |
| auth_mode | The authentication method for the batch endpoint. Currently only Azure Active Directory token-based authentication (aad_token) is supported. |

Create the endpoint:

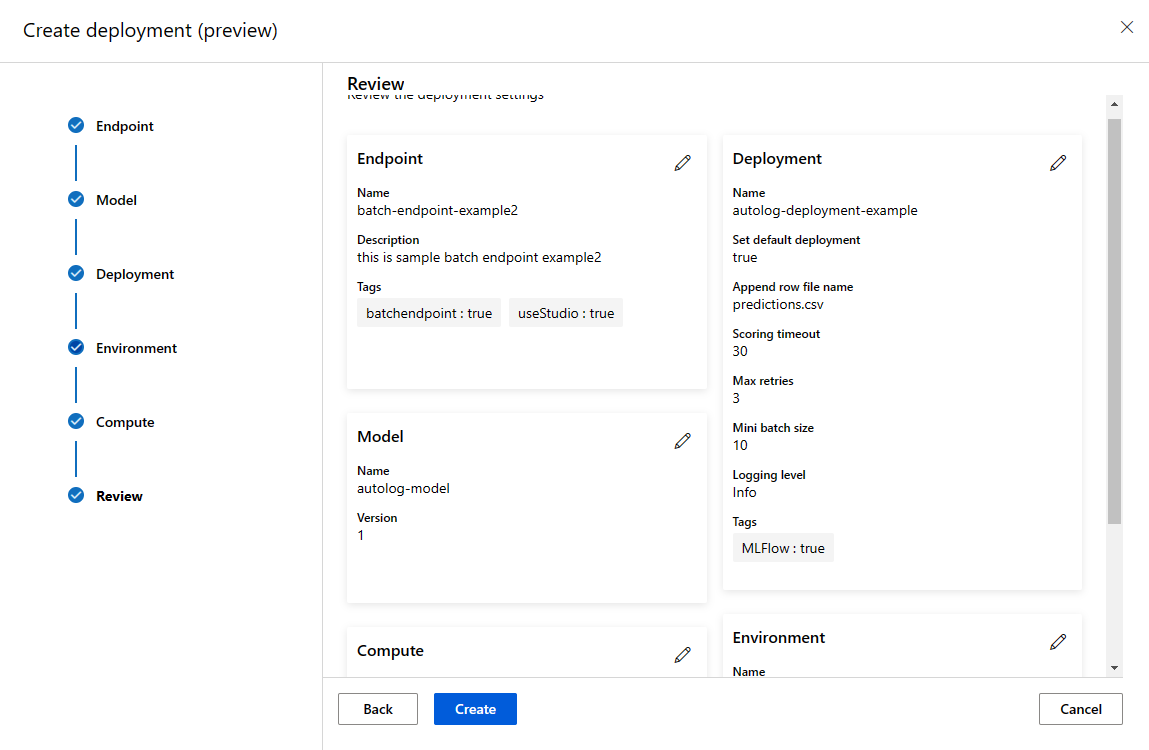

Create a batch deployment

A deployment is a set of resources required for hosting the model that does the actual inferencing. To create a batch deployment, you need all the following items:

- A registered model in the workspace.

- The code to score the model.

- The environment in which the model runs.

- The pre-created compute and resource settings.

Let's start by registering the model we want to deploy. Batch Deployments can only deploy models registered in the workspace. You can skip this step if the model you're trying to deploy is already registered. In this case, we're registering a Torch model for the popular digit recognition problem (MNIST).

Tip

Models are associated with the deployment rather than with the endpoint. This means that a single endpoint can serve different models, or different versions of the same model, as long as each one is deployed in its own deployment.

Now it's time to create a scoring script. Batch deployments require a scoring script that indicates how a given model should be executed and how input data must be processed. Batch Endpoints support scripts created in Python. In this case, we're deploying a model that reads image files representing digits and outputs the corresponding digit. The scoring script is as follows:

Note

For MLflow models, Azure Machine Learning automatically generates the scoring script, so you're not required to provide one. If your model is an MLflow model, you can skip this step. For more information about how batch endpoints work with MLflow models, see the dedicated tutorial Using MLflow models in batch deployments.

Warning

If you're deploying an Automated ML model under a batch endpoint, note that the scoring script that Automated ML provides only works for online endpoints and is not designed for batch execution. See Author scoring scripts for batch deployments to learn how to create one depending on what your model does.

deployment-torch/code/batch_driver.py

import os
import glob
from os.path import basename
from typing import List

import pandas as pd
import torch
import torchvision

from mnist_classifier import MnistClassifier


def init():
    global model
    global device

    # AZUREML_MODEL_DIR is an environment variable created during deployment
    # It is the path to the model folder
    model_path = os.environ["AZUREML_MODEL_DIR"]
    model_file = glob.glob(f"{model_path}/*/*.pt")[-1]

    # Pick the device first so that the weights and the inputs end up on the same one
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

    model = MnistClassifier()
    model.load_state_dict(torch.load(model_file, map_location=device))
    model.to(device)
    model.eval()


def run(mini_batch: List[str]) -> pd.DataFrame:
    print(f"Executing run method over batch of {len(mini_batch)} files.")

    results = []
    with torch.no_grad():
        for image_path in mini_batch:
            image_data = torchvision.io.read_image(image_path).float()
            batch_data = image_data.expand(1, -1, -1, -1)
            input_batch = batch_data.to(device)

            # perform inference
            predict_logits = model(input_batch)

            # Compute probabilities, classes and labels
            predictions = torch.nn.Softmax(dim=-1)(predict_logits)
            predicted_prob, predicted_class = torch.max(predictions, dim=-1)

            # Move the results back to the CPU before converting them to NumPy
            results.append(
                {
                    "file": basename(image_path),
                    "class": predicted_class.cpu().numpy()[0],
                    "probability": predicted_prob.cpu().numpy()[0],
                }
            )

    return pd.DataFrame(results)

Create an environment where your batch deployment will run. This environment needs to include the packages azureml-core and azureml-dataset-runtime[fuse], which are required by batch endpoints, plus any dependency your code requires for running. In this case, the dependencies have been captured in a conda.yaml:

deployment-torch/environment/conda.yaml

name: mnist-env
channels:
  - conda-forge
dependencies:
  - python=3.8.5
  - pip<22.0
  - pip:
    - torch==1.13.0
    - torchvision==0.14.0
    - pytorch-lightning
    - pandas
    - azureml-core
    - azureml-dataset-runtime[fuse]

Important

The packages azureml-core and azureml-dataset-runtime[fuse] are required by batch deployments and should be included in the environment dependencies.

Indicate the environment as follows:

The environment definition will be included in the deployment definition itself as an anonymous environment. You'll see the following lines in the deployment:

environment:
  name: batch-torch-py38
  image: mcr.microsoft.com/azureml/openmpi4.1.0-ubuntu20.04:latest
  conda_file: environment/conda.yaml

Warning

Curated environments are not supported in batch deployments. You will need to indicate your own environment. You can always use the base image of a curated environment as yours to simplify the process.

Create a deployment definition

deployment-torch/deployment.yml

$schema: https://azuremlschemas.azureedge.net/latest/batchDeployment.schema.json
name: mnist-torch-dpl
description: A deployment using Torch to solve the MNIST classification dataset.
endpoint_name: mnist-batch
model:
  name: mnist-classifier-torch
  path: model
code_configuration:
  code: code
  scoring_script: batch_driver.py
environment:
  name: batch-torch-py38
  image: mcr.microsoft.com/azureml/openmpi4.1.0-ubuntu20.04:latest
  conda_file: environment/conda.yaml
compute: azureml:batch-cluster
resources:
  instance_count: 1
max_concurrency_per_instance: 2
mini_batch_size: 10
output_action: append_row
output_file_name: predictions.csv
retry_settings:
  max_retries: 3
  timeout: 30
error_threshold: -1
logging_level: info

For the full batch deployment YAML schema, see CLI (v2) batch deployment YAML schema.

| Key | Description |
| --- | --- |
| name | The name of the deployment. |
| endpoint_name | The name of the endpoint to create the deployment under. |
| model | The model to be used for batch scoring. The example defines a model inline using path. Model files will be automatically uploaded and registered with an autogenerated name and version. Follow the Model schema for more options. As a best practice for production scenarios, you should create the model separately and reference it here. To reference an existing model, use the azureml:<model-name>:<model-version> syntax. |
| code_configuration.code | The local directory that contains all the Python source code to score the model. |
| code_configuration.scoring_script | The Python file in the above directory. This file must have an init() function and a run() function. Use the init() function for any costly or common preparation (for example, load the model in memory). init() will be called only once at the beginning of the process. Use run(mini_batch) to score each entry; the value of mini_batch is a list of file paths. The run() function should return a pandas DataFrame or an array. Each returned element indicates one successful run of an input element in the mini_batch. For more information on how to author a scoring script, see Understanding the scoring script. |
| environment | The environment to score the model. The example defines an environment inline using conda_file and image. The conda_file dependencies will be installed on top of the image. The environment will be automatically registered with an autogenerated name and version. Follow the Environment schema for more options. As a best practice for production scenarios, you should create the environment separately and reference it here. To reference an existing environment, use the azureml:<environment-name>:<environment-version> syntax. |
| compute | The compute to run batch scoring. The example uses the batch-cluster created at the beginning and references it using the azureml:<compute-name> syntax. |
| resources.instance_count | The number of instances to be used for each batch scoring job. |
| max_concurrency_per_instance | [Optional] The maximum number of parallel scoring_script runs per instance. |
| mini_batch_size | [Optional] The number of files the scoring_script can process in one run() call. |
| output_action | [Optional] How the output should be organized in the output file. append_row will merge all run() returned output results into one single file named output_file_name. summary_only won't merge the output results and will only calculate error_threshold. |
| output_file_name | [Optional] The name of the batch scoring output file for append_row output_action. |
| retry_settings.max_retries | [Optional] The maximum number of tries for a failed scoring_script run(). |
| retry_settings.timeout | [Optional] The timeout in seconds for a scoring_script run() for scoring a mini batch. |
| error_threshold | [Optional] The number of input file scoring failures that should be ignored. If the error count for the entire input goes above this value, the batch scoring job will be terminated. The example uses -1, which indicates that any number of failures is allowed without terminating the batch scoring job. |
| logging_level | [Optional] Log verbosity. Values in increasing verbosity are: WARNING, INFO, and DEBUG. |

Create the deployment:

Run the following code to create a batch deployment under the batch endpoint and set it as the default deployment.

az ml batch-deployment create --file deployment-torch/deployment.yml --endpoint-name $ENDPOINT_NAME --set-default

Tip

The --set-default parameter sets the newly created deployment as the default deployment of the endpoint. It's a convenient way to create a new default deployment of the endpoint, especially for the first deployment creation. As a best practice for production scenarios, you may want to create a new deployment without setting it as default, verify it, and update the default deployment later. For more information, see the Adding deployments to an endpoint section.

Check batch endpoint and deployment details.

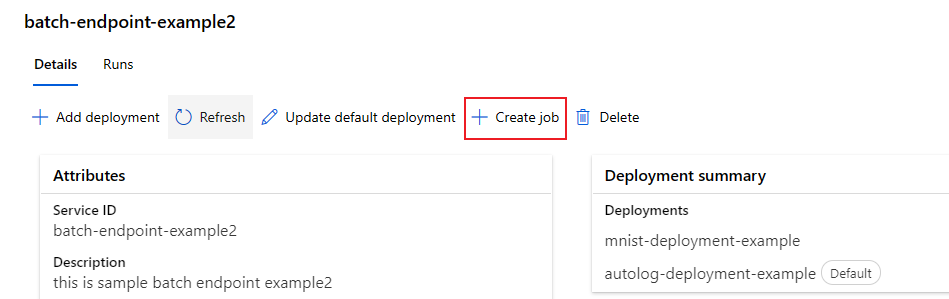

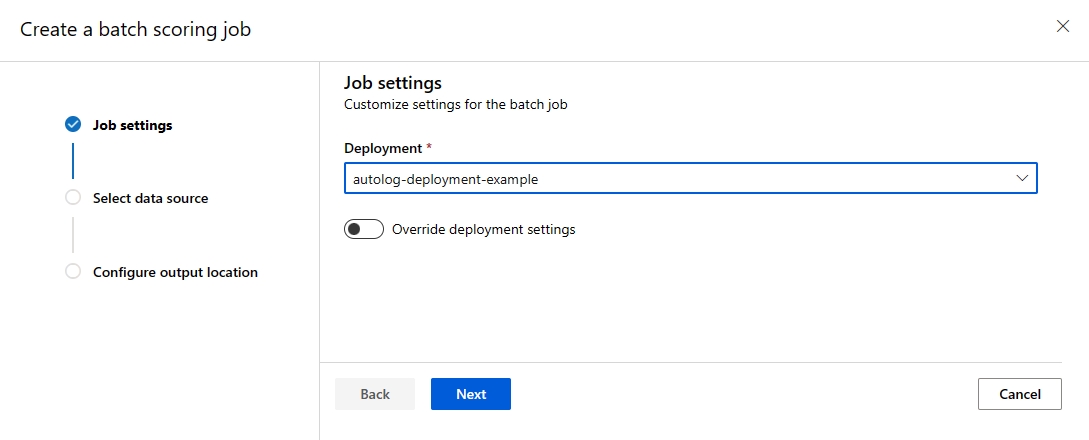
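The contract between the scoring script and the deployment settings can be easier to see in a toy form. The following is a minimal sketch, not the service's implementation: it assumes a stand-in model, returns plain lists of dictionaries instead of a pandas DataFrame, and uses a hypothetical helper named simulate_append_row to imitate how init() runs once, run() is called per mini-batch, and append_row merges every returned row into one file.

```python
import csv
import io
from typing import Callable, Dict, List, Optional

# Hypothetical stand-in for a loaded model; populated once by init().
model: Optional[Callable[[str], int]] = None


def init() -> None:
    """Costly one-time setup. Called once before any mini-batch is scored."""
    global model

    def toy_model(path: str) -> int:
        # Assumption for illustration only: "predict" a digit from the name length.
        return len(path) % 10

    model = toy_model


def run(mini_batch: List[str]) -> List[Dict[str, object]]:
    """Score every file path in the mini-batch and return one row per input."""
    return [{"file": path, "class": model(path)} for path in mini_batch]


def simulate_append_row(mini_batches: List[List[str]]) -> str:
    """Imitate append_row: merge the rows from every run() call into one CSV."""
    init()
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    for mini_batch in mini_batches:
        for row in run(mini_batch):
            writer.writerow([row["file"], row["class"]])
    return buffer.getvalue()


if __name__ == "__main__":
    print(simulate_append_row([["a.png", "bb.png"], ["ccc.png"]]))
```

In a real deployment, the service calls init() and run() for you; the helper only illustrates why each run() call should return one element per successfully processed input.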

Run batch endpoints and access results

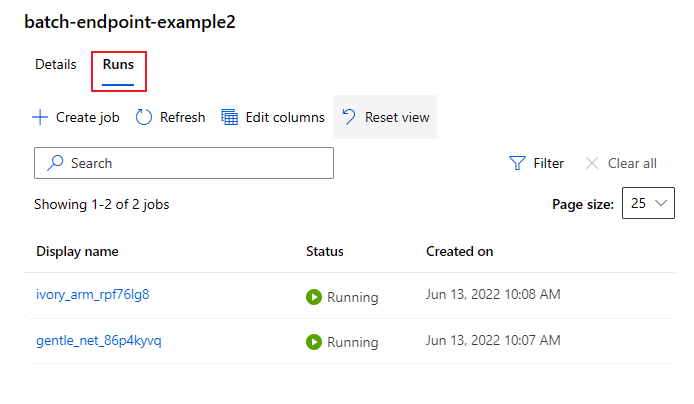

Invoking a batch endpoint triggers a batch scoring job. A job name will be returned from the invoke response and can be used to track the batch scoring progress.

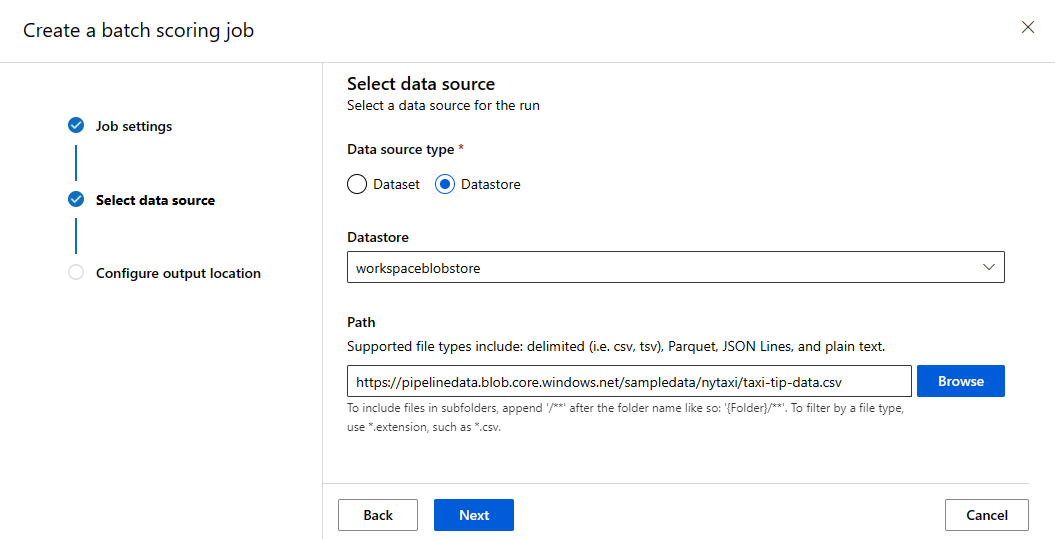

When running models for scoring in batch endpoints, you need to indicate the input data path where the endpoints should look for the data you want to score. The following example shows how to start a new job over a sample of the MNIST dataset stored in an Azure Storage Account:

Note

How does parallelization work?

Batch deployments distribute work at the file level, which means that a folder containing 100 files with mini-batches of 10 files will generate 10 batches of 10 files each. Notice that this happens regardless of the size of the files involved. If your files are too big to be processed in large mini-batches, we suggest that you either split the files into smaller files to achieve a higher level of parallelism or decrease the number of files per mini-batch. At this moment, batch deployments can't account for skews in the file size distribution.

JOB_NAME=$(az ml batch-endpoint invoke --name $ENDPOINT_NAME --input https://pipelinedata.blob.core.windows.net/sampledata/mnist --input-type uri_folder --query name -o tsv)
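The file-level distribution described in the note can be sketched in a few lines. This is only an illustration of the arithmetic, assuming a hypothetical helper named make_mini_batches and made-up file names; it isn't how the service schedules work internally. Note that the helper never looks at file sizes, which mirrors the statement that skews in file size aren't taken into account.

```python
from typing import List


def make_mini_batches(files: List[str], mini_batch_size: int) -> List[List[str]]:
    """Split a list of file paths into consecutive groups of mini_batch_size files."""
    if mini_batch_size < 1:
        raise ValueError("mini_batch_size must be at least 1")
    return [
        files[start:start + mini_batch_size]
        for start in range(0, len(files), mini_batch_size)
    ]


if __name__ == "__main__":
    # Hypothetical input folder with 100 image files.
    files = [f"digit_{index:03d}.png" for index in range(100)]
    batches = make_mini_batches(files, mini_batch_size=10)
    print(len(batches), len(batches[0]))  # prints "10 10"
```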

Batch endpoints support reading files or folders that are located in different locations. To learn more about the supported types and how to specify them, read Accessing data from batch endpoints jobs.

Tip

Local data folders/files can be used when executing batch endpoints from the Azure Machine Learning CLI or Azure Machine Learning SDK for Python. However, that operation uploads the local data to the default Azure Machine Learning data store of the workspace you're working in.

Important

Deprecation notice: Datasets of type FileDataset (V1) are deprecated and will be retired in the future. Existing batch endpoints relying on this functionality will continue to work but batch endpoints created with GA CLIv2 (2.4.0 and newer) or GA REST API (2022-05-01 and newer) will not support V1 dataset.

Monitor batch job execution progress

Batch scoring jobs usually take some time to process the entire set of inputs.

You can use the CLI command job show to view the job. Run the following code to check the job status from the previous endpoint invoke. To learn more about job commands, run az ml job -h.

STATUS=$(az ml job show -n $JOB_NAME --query status -o tsv)

echo $STATUS

if [[ $STATUS == "Completed" ]]

then

echo "Job completed"

elif [[ $STATUS == "Failed" ]]

then

echo "Job failed"

exit 1

else

echo "Job status not failed or completed"

exit 2

fi
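The check above reads the status once. If you'd rather wait until the job finishes, a polling loop is one option. The following is a sketch under stated assumptions: wait_for_job and its get_status argument are hypothetical names, and get_status is expected to return the same status strings that az ml job show reports. "Completed" and "Failed" come from the script above; treating "Canceled" as a terminal state is an assumption.

```python
import time
from typing import Callable

# "Completed" and "Failed" appear in the status check above.
# "Canceled" is an assumed additional terminal state.
TERMINAL_STATUSES = {"Completed", "Failed", "Canceled"}


def wait_for_job(
    get_status: Callable[[], str],
    poll_seconds: float = 30.0,
    max_polls: int = 120,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll get_status() until a terminal status is seen or max_polls is reached."""
    status = "Unknown"
    for attempt in range(max_polls):
        status = get_status()
        if status in TERMINAL_STATUSES:
            return status
        if attempt < max_polls - 1:
            sleep(poll_seconds)
    # Polling budget exhausted: return the last status that was observed.
    return status


if __name__ == "__main__":
    # Fake status source used only to demonstrate the loop.
    fake_statuses = iter(["Queued", "Running", "Completed"])
    print(wait_for_job(lambda: next(fake_statuses), poll_seconds=0))
```

In practice, get_status could wrap the same az ml job show -n $JOB_NAME --query status -o tsv call used above. The sleep argument exists only so the loop can be exercised without waiting.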

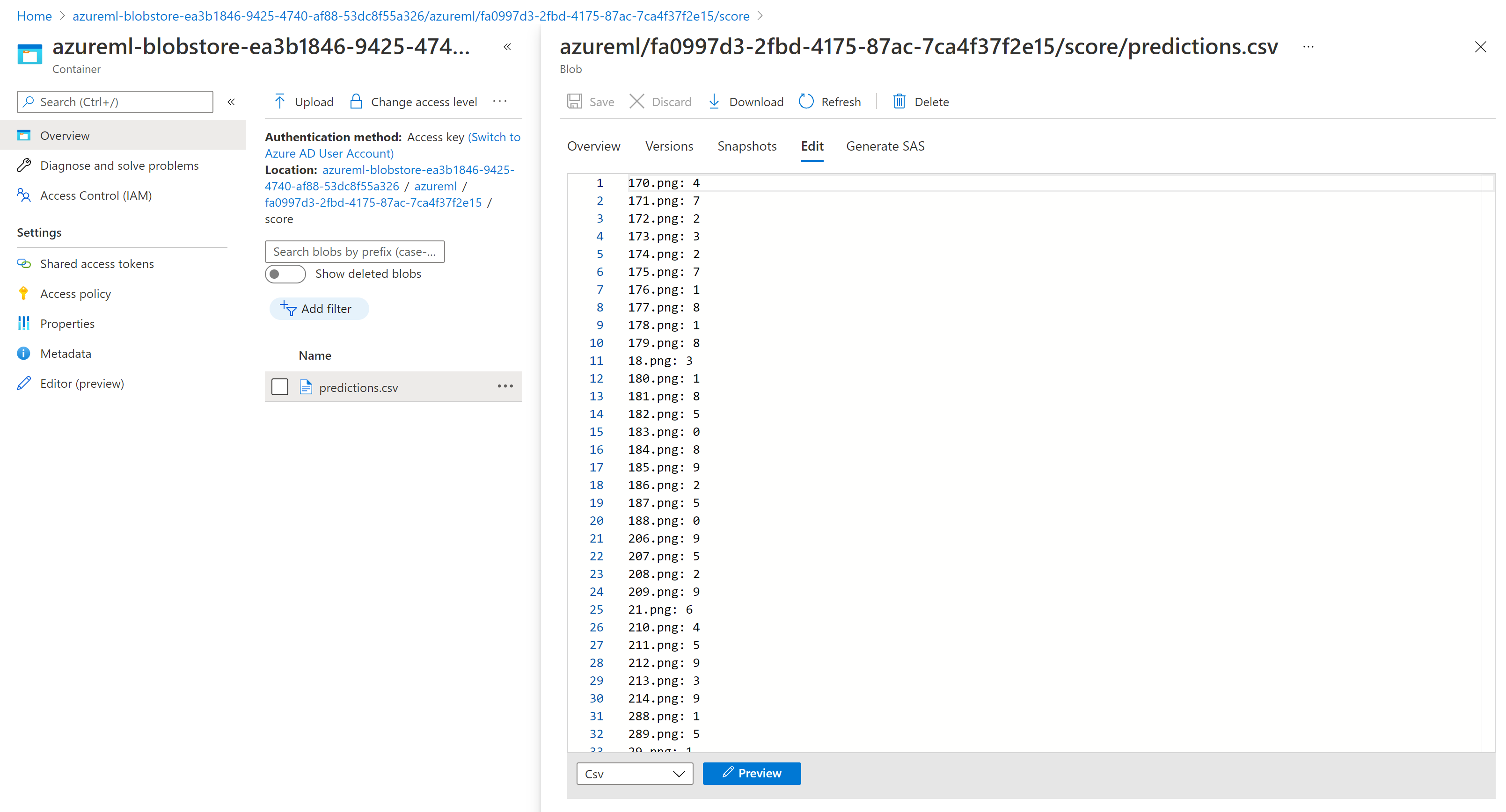

Check batch scoring results

The job outputs will be stored in cloud storage, either in the workspace's default blob storage or in the storage you specified. See Configure the output location to learn how to change the defaults. Use the following steps to view the scoring results in Azure Storage Explorer when the job is completed:

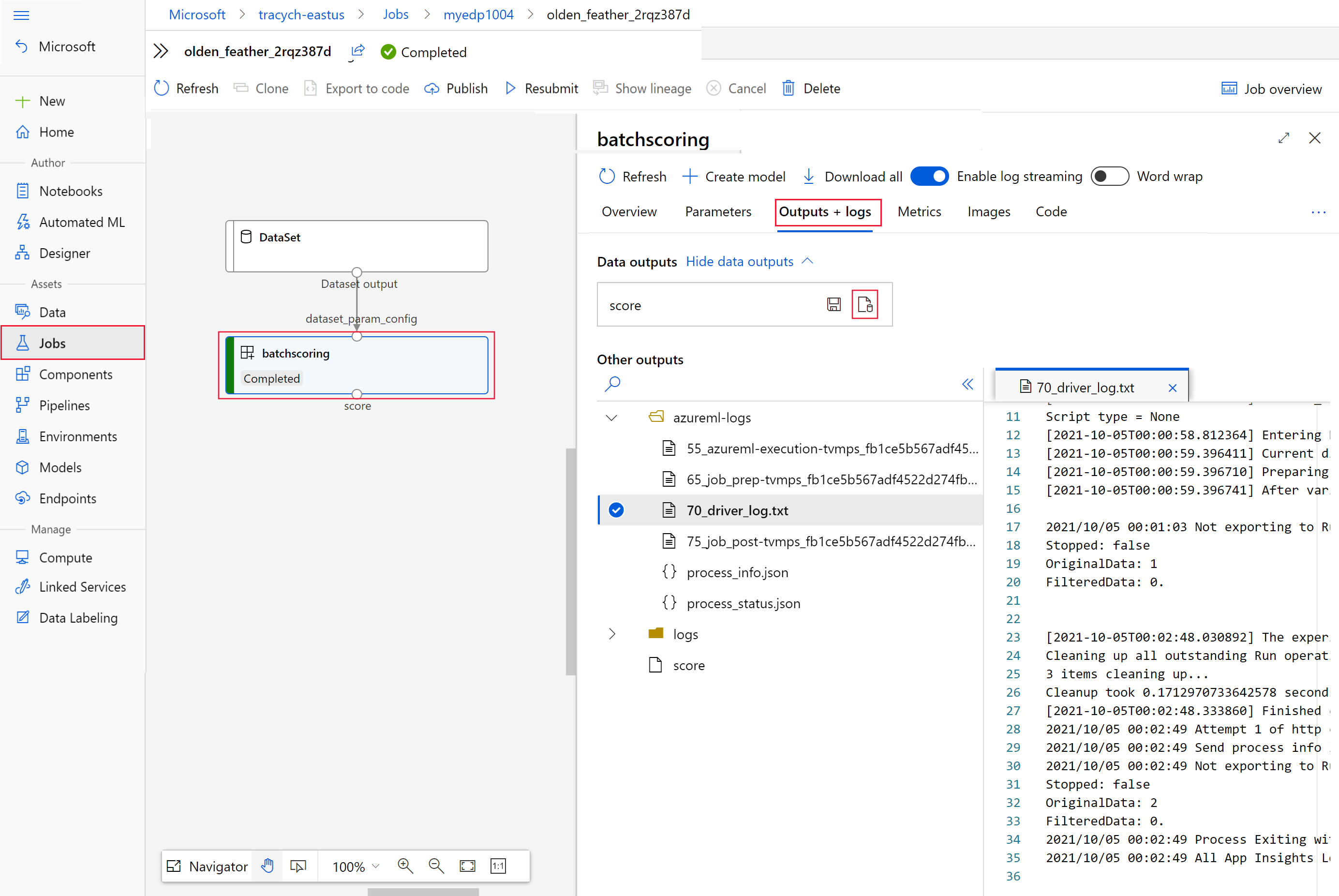

Run the following code to open the batch scoring job in Azure Machine Learning studio. The job studio link is also included in the response of invoke, as the value of interactionEndpoints.Studio.endpoint.

az ml job show -n $JOB_NAME --web

In the graph of the job, select the batchscoring step.

Select the Outputs + logs tab and then select Show data outputs.

From Data outputs, select the icon to open Storage Explorer.

The scoring results in Storage Explorer are similar to the following sample page:

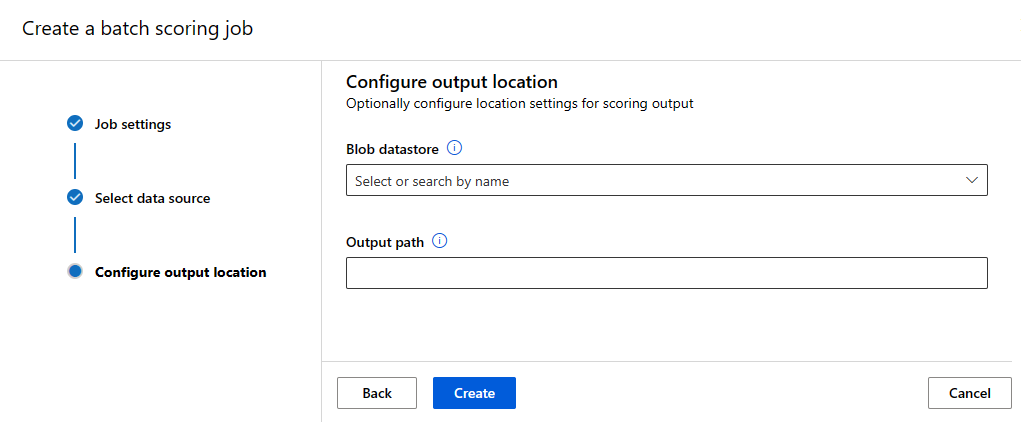

Configure the output location

The batch scoring results are by default stored in the workspace's default blob store within a folder named by job name (a system-generated GUID). You can configure where to store the scoring outputs when you invoke the batch endpoint.

Use --output-path to configure any folder in an Azure Machine Learning registered datastore. The syntax for --output-path is the same as for --input when you're specifying a folder, that is, azureml://datastores/<datastore-name>/paths/<path-on-datastore>/. Use --set output_file_name=<your-file-name> to configure a new output file name.

export OUTPUT_FILE_NAME=predictions_`echo $RANDOM`.csv

JOB_NAME=$(az ml batch-endpoint invoke --name $ENDPOINT_NAME --input https://pipelinedata.blob.core.windows.net/sampledata/mnist --input-type uri_folder --output-path azureml://datastores/workspaceblobstore/paths/$ENDPOINT_NAME --set output_file_name=$OUTPUT_FILE_NAME --query name -o tsv)
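If you assemble these values in a script rather than by hand, a small helper can keep the datastore URI well formed. This is a sketch only: datastore_folder_uri and unique_output_file_name are hypothetical helper names, and the UUID suffix is a swapped-in alternative to the $RANDOM approach used above, not something the CLI requires.

```python
import uuid


def datastore_folder_uri(datastore_name: str, path_on_datastore: str) -> str:
    """Build azureml://datastores/<datastore-name>/paths/<path-on-datastore>/."""
    if not datastore_name:
        raise ValueError("datastore_name must not be empty")
    # Normalize slashes so the result always has exactly one trailing slash.
    clean_path = path_on_datastore.strip("/")
    return f"azureml://datastores/{datastore_name}/paths/{clean_path}/"


def unique_output_file_name(prefix: str = "predictions", suffix: str = ".csv") -> str:
    """Return a file name that is very unlikely to collide with an existing one."""
    return f"{prefix}_{uuid.uuid4().hex}{suffix}"


if __name__ == "__main__":
    print(datastore_folder_uri("workspaceblobstore", "mnist-batch"))
    print(unique_output_file_name())
```

The first call prints azureml://datastores/workspaceblobstore/paths/mnist-batch/, which matches the syntax described above.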

Warning

You must use a unique output location. If the output file exists, the batch scoring job will fail.

Important

Unlike inputs, outputs support only Azure Machine Learning data stores that run on blob storage accounts.

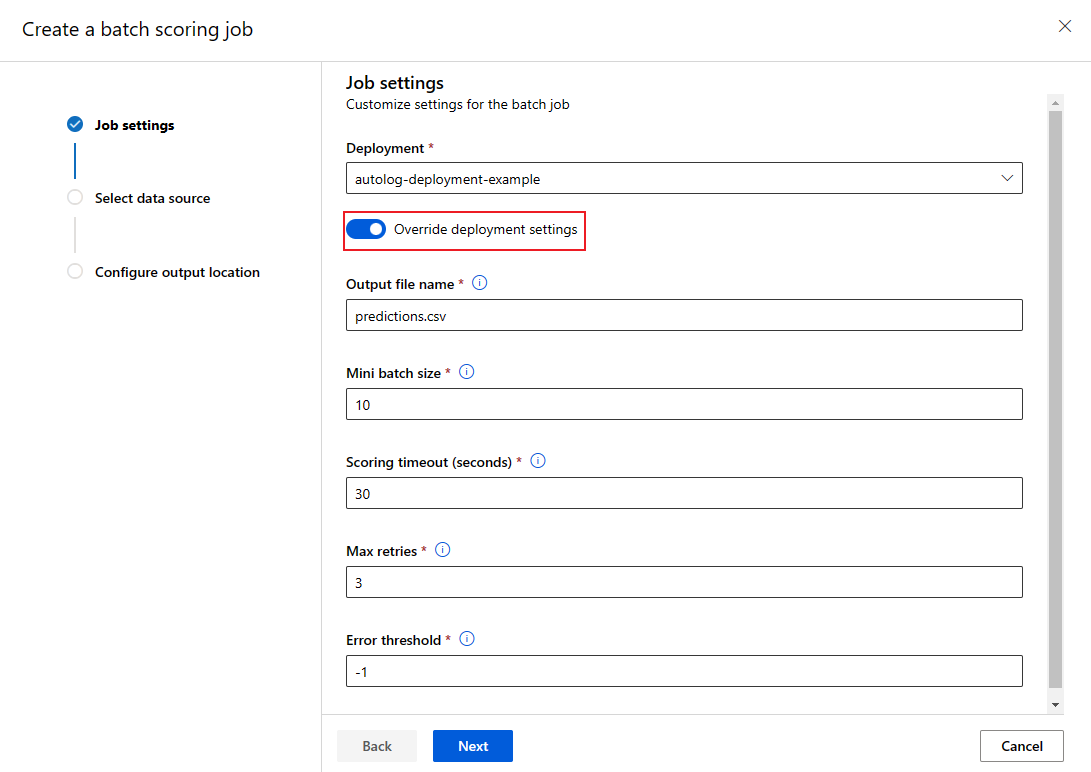

Overwrite deployment configuration for each job

Some settings can be overwritten when you invoke the endpoint to make the best use of the compute resources and to improve performance. The following settings can be configured on a per-job basis:

- Use instance count to overwrite the number of instances to request from the compute cluster. For example, for larger volumes of input data, you may want to use more instances to speed up the end-to-end batch scoring.

- Use mini-batch size to overwrite the number of files to include in each mini-batch. The number of mini batches is decided by the total input file count and mini_batch_size. A smaller mini_batch_size generates more mini batches. Mini batches can be run in parallel, but there might be extra scheduling and invocation overhead.

- Other settings that can be overwritten include max retries, timeout, and error threshold. These settings might impact the end-to-end batch scoring time for different workloads.

export OUTPUT_FILE_NAME=predictions_`echo $RANDOM`.csv

JOB_NAME=$(az ml batch-endpoint invoke --name $ENDPOINT_NAME --input https://pipelinedata.blob.core.windows.net/sampledata/mnist --input-type uri_folder --mini-batch-size 20 --instance-count 5 --query name -o tsv)
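To get a feel for how these overrides interact, you can do the arithmetic ahead of time. The sketch below uses a hypothetical helper named plan_job and an invented input of 1,000 files. It assumes that the number of scoring runs that can execute at once is instance_count multiplied by max_concurrency_per_instance, and its "rounds" figure is only a rough estimate that ignores scheduling overhead and differences in file size.

```python
import math
from typing import Dict


def plan_job(
    total_files: int,
    mini_batch_size: int,
    instance_count: int,
    max_concurrency_per_instance: int,
) -> Dict[str, int]:
    """Estimate mini-batch count, parallel capacity, and sequential rounds."""
    for name, value in (
        ("mini_batch_size", mini_batch_size),
        ("instance_count", instance_count),
        ("max_concurrency_per_instance", max_concurrency_per_instance),
    ):
        if value < 1:
            raise ValueError(f"{name} must be at least 1")

    mini_batches = math.ceil(total_files / mini_batch_size)
    # Assumption: each instance runs up to max_concurrency_per_instance run() calls at once.
    parallel_slots = instance_count * max_concurrency_per_instance
    rounds = math.ceil(mini_batches / parallel_slots)
    return {
        "mini_batches": mini_batches,
        "parallel_slots": parallel_slots,
        "rounds": rounds,
    }


if __name__ == "__main__":
    # Deployment defaults from deployment.yml, with a hypothetical 1,000 input files.
    print(plan_job(1000, mini_batch_size=10, instance_count=1, max_concurrency_per_instance=2))
    # Per-job overrides from the invoke command above.
    print(plan_job(1000, mini_batch_size=20, instance_count=5, max_concurrency_per_instance=2))
```

With these made-up numbers, the defaults give 100 mini batches across 2 slots, while the overrides give 50 mini batches across 10 slots.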

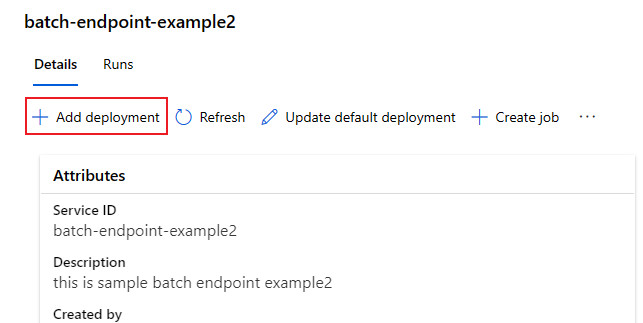

Adding deployments to an endpoint

Once you have a batch endpoint with a deployment, you can continue to refine your model and add new deployments. Batch endpoints will continue serving the default deployment while you develop and deploy new models under the same endpoint. Deployments don't affect one another.

In this example, you'll learn how to add a second deployment that solves the same MNIST problem but using a model built with Keras and TensorFlow.

Adding a second deployment

Create an environment where your batch deployment will run. Include in the environment any dependency your code requires for running. You'll also need to add the library azureml-core as it is required for batch deployments to work. The following environment definition has the required libraries to run a model with TensorFlow.

The environment definition will be included in the deployment definition itself as an anonymous environment. You'll see the following lines in the deployment:

environment:
  name: batch-tensorflow-py38
  image: mcr.microsoft.com/azureml/openmpi4.1.0-ubuntu20.04:latest
  conda_file: environment/conda.yaml

The conda file used looks as follows:

deployment-keras/environment/conda.yaml

name: tensorflow-env
channels:
  - conda-forge
dependencies:
  - python=3.8.5
  - pip
  - pip:
    - pandas
    - tensorflow
    - pillow
    - azureml-core
    - azureml-dataset-runtime[fuse]

Create a scoring script for the model:

deployment-keras/code/batch_driver.py

import os
import numpy as np
import pandas as pd
import tensorflow as tf
from typing import List
from os.path import basename
from PIL import Image
from tensorflow.keras.models import load_model


def init():
    global model

    # AZUREML_MODEL_DIR is an environment variable created during deployment
    model_path = os.path.join(os.environ["AZUREML_MODEL_DIR"], "model")

    # load the model
    model = load_model(model_path)


def run(mini_batch: List[str]) -> pd.DataFrame:
    print(f"Executing run method over batch of {len(mini_batch)} files.")

    results = []
    for image_path in mini_batch:
        data = Image.open(image_path)
        data = np.array(data)
        data_batch = tf.expand_dims(data, axis=0)

        # perform inference
        pred = model.predict(data_batch)

        # Compute probabilities, classes and labels
        pred_prob = tf.math.reduce_max(tf.math.softmax(pred, axis=-1)).numpy()
        pred_class = tf.math.argmax(pred, axis=-1).numpy()

        results.append(
            {
                "file": basename(image_path),
                "class": pred_class[0],
                "probability": pred_prob,
            }
        )

    return pd.DataFrame(results)

Create a deployment definition

deployment-keras/deployment.yml

$schema: https://azuremlschemas.azureedge.net/latest/batchDeployment.schema.json
name: mnist-keras-dpl
description: A deployment using Keras with TensorFlow to solve the MNIST classification dataset.
endpoint_name: mnist-batch
model:
  name: mnist-classifier-keras
  path: model
code_configuration:
  code: code
  scoring_script: batch_driver.py
environment:
  name: batch-tensorflow-py38
  image: mcr.microsoft.com/azureml/openmpi4.1.0-ubuntu20.04:latest
  conda_file: environment/conda.yaml
compute: azureml:batch-cluster
resources:
  instance_count: 1
max_concurrency_per_instance: 2
mini_batch_size: 10
output_action: append_row
output_file_name: predictions.csv

Create the deployment:

Run the following code to create a batch deployment under the batch endpoint without setting it as the default deployment.

az ml batch-deployment create --file deployment-keras/deployment.yml --endpoint-name $ENDPOINT_NAME

Tip

The --set-default parameter is intentionally omitted in this case. As a best practice for production scenarios, you may want to create a new deployment without setting it as default, verify it, and update the default deployment later.

Test a non-default batch deployment

To test the new non-default deployment, you'll need to know the name of the deployment you want to run.

DEPLOYMENT_NAME="mnist-keras-dpl"

JOB_NAME=$(az ml batch-endpoint invoke --name $ENDPOINT_NAME --deployment-name $DEPLOYMENT_NAME --input https://pipelinedata.blob.core.chinacloudapi.cn/sampledata/mnist --input-type uri_folder --query name -o tsv)

Notice that --deployment-name is used to specify the deployment to execute. This parameter allows you to invoke a non-default deployment, and it doesn't update the default deployment of the batch endpoint.

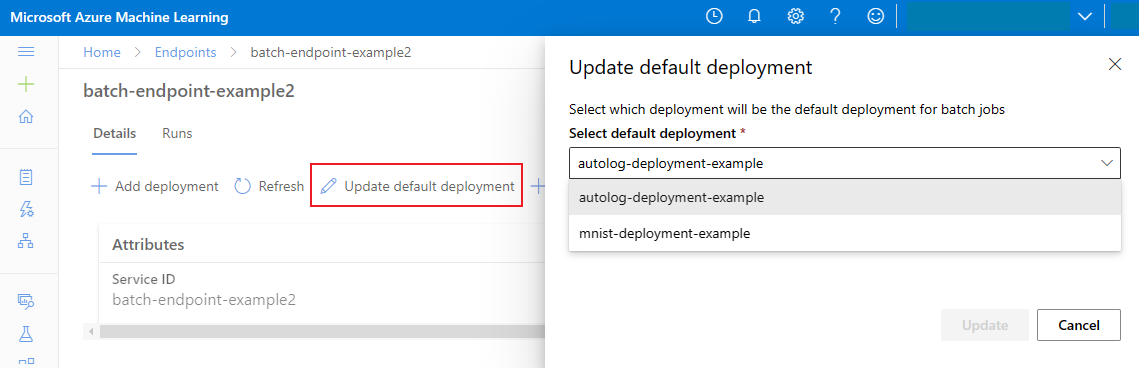

Update the default batch deployment

Although you can invoke a specific deployment inside an endpoint, you'll usually want to invoke the endpoint itself and let the endpoint decide which deployment to use. This deployment is called the default deployment. It lets you change the default deployment, and hence the model serving the endpoint, without changing the contract with the user invoking the endpoint. Use the following instruction to update the default deployment:

az ml batch-endpoint update --name $ENDPOINT_NAME --set defaults.deployment_name=$DEPLOYMENT_NAME
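The routing behavior described in this section can be modeled with a small in-memory class. This is purely a conceptual sketch, not the service's implementation: ToyBatchEndpoint and its methods are hypothetical names, and it only captures the rule that an explicit deployment name wins and that the default deployment is used otherwise.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class ToyBatchEndpoint:
    """Conceptual model of an endpoint that hosts several deployments."""

    name: str
    deployments: Dict[str, str] = field(default_factory=dict)  # deployment -> model
    default_deployment: Optional[str] = None

    def add_deployment(self, deployment: str, model: str, set_default: bool = False) -> None:
        self.deployments[deployment] = model
        if set_default:
            self.default_deployment = deployment

    def resolve(self, deployment: Optional[str] = None) -> str:
        """Return the deployment that would serve an invocation."""
        target = deployment or self.default_deployment
        if target is None:
            raise LookupError("the endpoint has no default deployment")
        if target not in self.deployments:
            raise KeyError(f"unknown deployment: {target}")
        return target


if __name__ == "__main__":
    endpoint = ToyBatchEndpoint("mnist-batch")
    endpoint.add_deployment("mnist-torch-dpl", "mnist-classifier-torch", set_default=True)
    endpoint.add_deployment("mnist-keras-dpl", "mnist-classifier-keras")

    print(endpoint.resolve())                   # default deployment
    print(endpoint.resolve("mnist-keras-dpl"))  # explicit, default unchanged

    endpoint.default_deployment = "mnist-keras-dpl"  # mirrors the update command
    print(endpoint.resolve())
```

Callers that invoke the endpoint without naming a deployment see the new model after the default changes, which is the contract this section describes.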

Delete the batch endpoint and the deployment

If you aren't going to use the old batch deployment, you should delete it by running the following code. --yes is used to confirm the deletion.

az ml batch-deployment delete --name mnist-torch-dpl --endpoint-name $ENDPOINT_NAME --yes

Run the following code to delete the batch endpoint and all the underlying deployments. Batch scoring jobs won't be deleted.

az ml batch-endpoint delete --name $ENDPOINT_NAME --yes