How to deploy a registered R model to an online (real-time) endpoint

APPLIES TO:  Azure CLI ml extension v2 (current)

In this article, you'll learn how to deploy an R model to a managed endpoint (Web API) so that your application can score new data against the model in near real-time.

Prerequisites

- An Azure Machine Learning workspace.

- Azure CLI and ml extension installed. Or use a compute instance in your workspace, which has the CLI pre-installed.

- At least one custom environment associated with your workspace. Create an R environment, or any other custom environment if you don't have one.

- An understanding of the R plumber package.

- A model that you've trained and packaged with crate, and registered into your workspace.

Create a folder with this structure

Create this folder structure for your project:

📂 r-deploy-azureml

├─📂 docker-context

│ ├─ Dockerfile

│ └─ start_plumber.R

├─📂 src

│ └─ plumber.R

├─ deployment.yml

├─ endpoint.yml

The contents of each of these files are shown and explained in this article.
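As a convenience, the layout above can be scaffolded from a terminal. This is a minimal sketch that only creates empty placeholder files with the names shown in the tree; you still fill in each file as described in the following sections.

```shell
# Sketch: create the project folders and empty placeholder files.
mkdir -p r-deploy-azureml/docker-context r-deploy-azureml/src

touch r-deploy-azureml/docker-context/Dockerfile \
      r-deploy-azureml/docker-context/start_plumber.R \
      r-deploy-azureml/src/plumber.R \
      r-deploy-azureml/deployment.yml \
      r-deploy-azureml/endpoint.yml

# List the files that were created
find r-deploy-azureml -type f | sort
```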

Dockerfile

This is the file that defines the container environment. You'll also define the installation of any additional R packages here.

A sample Dockerfile will look like this:

# REQUIRED: Begin with the latest R container with plumber

FROM rstudio/plumber:latest

# REQUIRED: Install carrier package to be able to use the crated model (whether from a training job

# or uploaded)

RUN R -e "install.packages('carrier', dependencies = TRUE, repos = 'https://cloud.r-project.org/')"

# OPTIONAL: Install any additional R packages you may need for your model crate to run

RUN R -e "install.packages('<PACKAGE-NAME>', dependencies = TRUE, repos = 'https://cloud.r-project.org/')"

RUN R -e "install.packages('<PACKAGE-NAME>', dependencies = TRUE, repos = 'https://cloud.r-project.org/')"

# REQUIRED

ENTRYPOINT []

COPY ./start_plumber.R /tmp/start_plumber.R

CMD ["Rscript", "/tmp/start_plumber.R"]

Modify the file to add the packages you need for your scoring script.

plumber.R

Important

This section shows how to structure the plumber.R script. For detailed information about the plumber package, see the plumber documentation.

The file plumber.R is the R script where you'll define the function for scoring. This script also performs tasks that are necessary to make your endpoint work. The script:

- Gets the path where the model is mounted from the AZUREML_MODEL_DIR environment variable in the container.

- Loads a model object created with the crate function from the carrier package, which was saved as crate.bin when it was packaged.

- Unserializes the model object.

- Defines the scoring function.

Tip

Make sure that whatever your scoring function produces can be converted back to JSON. Some R objects are not easily converted.

# plumber.R

# This script will be deployed to a managed endpoint to do the model scoring

# REQUIRED

# When you deploy a model as an online endpoint, Azure Machine Learning mounts your model

# to your endpoint. Model mounting enables you to deploy new versions of the model without

# having to create a new Docker image.

model_dir <- Sys.getenv("AZUREML_MODEL_DIR")

# REQUIRED

# This reads the serialized model with its respective predict/score method you

# registered. The loaded load_model object is a raw binary object.

load_model <- readRDS(paste0(model_dir, "/models/crate.bin"))

# REQUIRED

# Unserialize the load_model object to turn it back into a function

scoring_function <- unserialize(load_model)

# REQUIRED

# << Readiness route vs. liveness route >>

# An HTTP server defines paths for both liveness and readiness. A liveness route is used to

# check whether the server is running. A readiness route is used to check whether the

# server's ready to do work. In machine learning inference, a server could respond 200 OK

# to a liveness request before loading a model. The server could respond 200 OK to a

# readiness request only after the model has been loaded into memory.

#* Liveness check

#* @get /live

function() {

"alive"

}

#* Readiness check

#* @get /ready

function() {

"ready"

}

# << The scoring function >>

# This is the function that is deployed as a web API that will score the model.

# Make sure that whatever you are producing as a score can be converted

# to JSON to be sent back as the API response.

# In the example here, forecast_horizon (the number of time units to forecast) is the input to scoring_function.

# The output is a tibble.

# Some of the output types are converted so that they work in JSON.

#* @param forecast_horizon

#* @post /score

function(forecast_horizon) {

  scoring_function(as.numeric(forecast_horizon)) |>

    tibble::as_tibble() |>

    dplyr::transmute(period = as.character(yr_wk),

                     dist = as.character(logmove),

                     forecast = .mean) |>

    jsonlite::toJSON()

}

start_plumber.R

The file start_plumber.R is the R script that gets run when the container starts, and it calls your plumber.R script. Use the following script as-is.

entry_script_path <- paste0(Sys.getenv('AML_APP_ROOT'),'/', Sys.getenv('AZUREML_ENTRY_SCRIPT'))

pr <- plumber::plumb(entry_script_path)

args <- list(host = '0.0.0.0', port = 8000);

if (packageVersion('plumber') >= '1.0.0') {

pr$setDocs(TRUE)

} else {

args$swagger <- TRUE

}

do.call(pr$run, args)

Build container

These steps assume you have an Azure Container Registry associated with your workspace, which is created when you create your first custom environment. To see if you have a custom environment:

- Sign in to Azure Machine Learning studio.

- Select your workspace if necessary.

- On the left navigation, select Environments.

- On the top, select Custom environments.

- If you see custom environments, nothing more is needed.

- If you don't see any custom environments, create an R environment, or any other custom environment. (You won't use this environment for deployment, but you will use the container registry that is also created for you.)

Once you have verified that you have at least one custom environment, start a terminal and set up the CLI:

Open a terminal window and sign in to Azure. If you're using an Azure Machine Learning compute instance, use:

az login --identity

If you're not on the compute instance, omit --identity and follow the prompt to open a browser window to authenticate.

Make sure you have the most recent versions of the CLI and the ml extension:

az upgrade

If you have multiple Azure subscriptions, set the active subscription to the one you're using for your workspace. (You can skip this step if you only have access to a single subscription.) Replace <YOUR_SUBSCRIPTION_NAME_OR_ID> with either your subscription name or subscription ID. Also remove the brackets <>.

az account set -s "<YOUR_SUBSCRIPTION_NAME_OR_ID>"

Set the default workspace. If you're using a compute instance, you can keep the following command as is. If you're on any other computer, substitute your resource group and workspace name instead. (You can find these values in Azure Machine Learning studio.)

az configure --defaults group=$CI_RESOURCE_GROUP workspace=$CI_WORKSPACE

After you've set up the CLI, use the following steps to build a container.

Make sure you are in your project directory.

cd r-deploy-azureml

To build the image in the cloud, execute the following bash commands in your terminal. Replace <IMAGE-NAME> with the name you want to give the image.

If your workspace is in a virtual network, see Enable Azure Container Registry (ACR) for additional steps to add --image-build-compute to the az acr build command in the last line of this code.

WORKSPACE=$(az config get --query "defaults[?name == 'workspace'].value" -o tsv)
ACR_NAME=$(az ml workspace show -n $WORKSPACE --query container_registry -o tsv | cut -d'/' -f9-)
IMAGE_TAG=${ACR_NAME}.azurecr.cn/<IMAGE-NAME>
az acr build ./docker-context -t $IMAGE_TAG -r $ACR_NAME

Important

It will take a few minutes for the image to be built. Wait until the build process is complete before proceeding to the next section. Don't close this terminal; you'll use it next to create the deployment.

The az acr build command automatically uploads your docker-context folder, which contains the artifacts needed to build the image, to the cloud, where the image is built and hosted in an Azure Container Registry.
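The build commands derive the registry name by cutting the ninth slash-separated field out of the workspace's container_registry value. The following sketch shows that step in isolation. The resource ID, resource group, registry name, and image name are hypothetical stand-ins, and the sketch assumes the value has the usual Azure resource ID shape.

```shell
# Hypothetical resource ID, shaped like the workspace's container_registry value.
REGISTRY_ID="/subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/my-rg/providers/Microsoft.ContainerRegistry/registries/myregistry"

# Field 1 is the empty string before the leading "/", so the registry name is field 9.
ACR_NAME=$(printf '%s\n' "$REGISTRY_ID" | cut -d'/' -f9-)

# "my-r-image" is a hypothetical value for <IMAGE-NAME>.
IMAGE_TAG="${ACR_NAME}.azurecr.cn/my-r-image"

echo "$ACR_NAME"
echo "$IMAGE_TAG"
```

If the printed registry name is empty or contains slashes, the container_registry value probably has a different shape than assumed here, and the field number needs adjusting.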

Deploy model

In this section of the article, you'll define and create an endpoint and deployment to deploy the model and image built in the previous steps to a managed online endpoint.

An endpoint is an HTTPS endpoint that clients - such as an application - can call to receive the scoring output of a trained model. It provides:

- Authentication using "key & token" based auth

- SSL termination

- A stable scoring URI (endpoint-name.region.inference.ml.Azure.cn)

A deployment is a set of resources required for hosting the model that does the actual scoring. A single endpoint can contain multiple deployments. The load balancing capabilities of Azure Machine Learning managed endpoints allow you to give any percentage of traffic to each deployment. You can use traffic allocation for safe-rollout blue/green deployments by balancing requests between different instances.
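To make the traffic split concrete, here is a minimal sketch that checks a proposed split before applying it. The deployment names blue and green are hypothetical, the helper traffic_sums_to_100 is illustrative and not part of the Azure CLI, and the sketch assumes the percentages are expected to total 100. It prints the az ml online-endpoint update command instead of running it, so it works without Azure access.

```shell
# Returns 0 when the percentages in "name=pct" arguments add up to 100.
traffic_sums_to_100() {
  total=0
  for pair in "$@"; do
    pct="${pair#*=}"            # keep the text after the "="
    total=$((total + pct))
  done
  [ "$total" -eq 100 ]
}

# Hypothetical split: 90% to "blue", 10% to "green".
SPLIT="blue=90 green=10"

# $SPLIT is intentionally unquoted so each pair becomes its own argument.
if traffic_sums_to_100 $SPLIT; then
  echo "az ml online-endpoint update --name <ENDPOINT-NAME> --traffic \"$SPLIT\""
else
  echo "Traffic percentages do not add up to 100: $SPLIT"
fi
```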

Create managed online endpoint

In your project directory, add the endpoint.yml file with the following code. Replace <ENDPOINT-NAME> with the name you want to give your managed endpoint.

$schema: https://azuremlschemas.azureedge.net/latest/managedOnlineEndpoint.schema.json
name: <ENDPOINT-NAME>
auth_mode: aml_token

Using the same terminal where you built the image, execute the following CLI command to create an endpoint:

az ml online-endpoint create -f endpoint.yml

Leave the terminal open to continue using it in the next section.

Create deployment

To create your deployment, add the following code to the deployment.yml file.

- Replace <ENDPOINT-NAME> with the endpoint name you defined in the endpoint.yml file.

- Replace <DEPLOYMENT-NAME> with the name you want to give the deployment.

- Replace <MODEL-URI> with the registered model's URI in the form of azureml:modelname@latest.

- Replace <IMAGE-TAG> with the value from:

echo $IMAGE_TAG

$schema: https://azuremlschemas.azureedge.net/latest/managedOnlineDeployment.schema.json
name: <DEPLOYMENT-NAME>
endpoint_name: <ENDPOINT-NAME>
code_configuration:
  code: ./src
  scoring_script: plumber.R
model: <MODEL-URI>
environment:
  image: <IMAGE-TAG>
  inference_config:
    liveness_route:
      port: 8000
      path: /live
    readiness_route:
      port: 8000
      path: /ready
    scoring_route:
      port: 8000
      path: /score
instance_type: Standard_DS2_v2
instance_count: 1

Next, in your terminal execute the following CLI command to create the deployment (notice that you're setting 100% of the traffic to this model):

az ml online-deployment create -f deployment.yml --all-traffic --skip-script-validation

Note

It may take several minutes for the service to be deployed. Wait until deployment is finished before proceeding to the next section.
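Because endpoint.yml and deployment.yml both rely on replacing <PLACEHOLDER> tokens by hand, a quick check before running the create commands can catch one that was missed. The following is a minimal sketch. The helper name no_placeholders_left and the throwaway file are hypothetical, and the pattern assumes placeholders are written in upper case with hyphens, as in this article.

```shell
# Returns 0 when the file contains no leftover <PLACEHOLDER> tokens.
no_placeholders_left() {
  ! grep -q '<[A-Z][A-Z-]*>' "$1"
}

# Usage with a throwaway file that still contains one placeholder.
printf 'name: <DEPLOYMENT-NAME>\nendpoint_name: my-endpoint\n' > /tmp/deployment_check.yml

if no_placeholders_left /tmp/deployment_check.yml; then
  echo "No placeholders left; ready to deploy."
else
  echo "Placeholders remain:"
  grep -n '<[A-Z][A-Z-]*>' /tmp/deployment_check.yml
fi
```

Point the function at your real deployment.yml and endpoint.yml to use it in practice.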

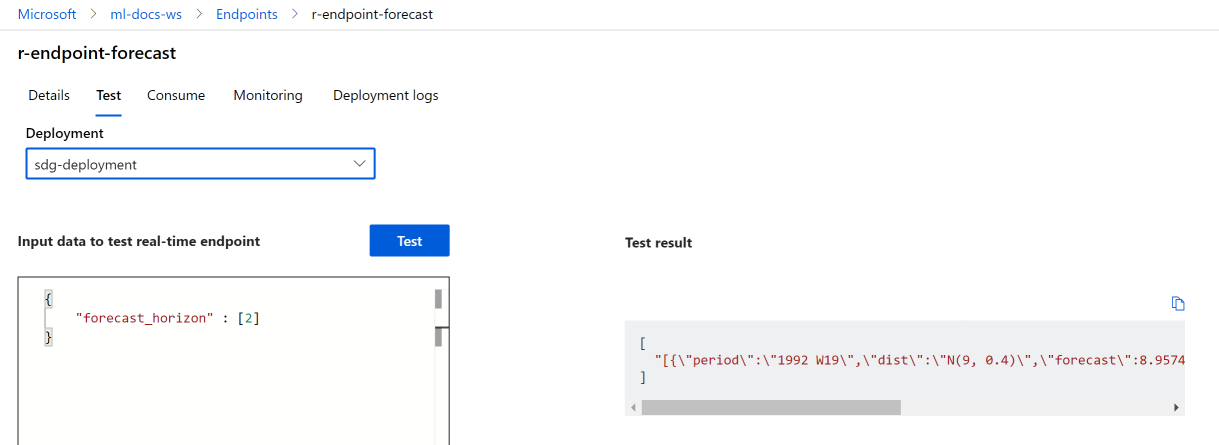

Test

Once your deployment has been successfully created, you can test the endpoint using studio or the CLI:

Navigate to the Azure Machine Learning studio and select Endpoints from the left-hand menu. Next, select the endpoint you created earlier.

Enter the following JSON into the Input data to test real-time endpoint textbox:

{

"forecast_horizon" : [2]

}

Select Test. The response contains the output of your scoring function as JSON.
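To test from the CLI instead of studio, a minimal sketch is to save the same request body to a file and pass it to az ml online-endpoint invoke. The file name sample_request.json and the ENDPOINT_NAME variable are assumptions made for this example; the call is skipped unless ENDPOINT_NAME is set and the Azure CLI is available.

```shell
# Write the same request body used in the studio test to a file.
cat > sample_request.json <<'EOF'
{
  "forecast_horizon": [2]
}
EOF

# ENDPOINT_NAME is a hypothetical variable; set it to your endpoint's name.
if [ -n "${ENDPOINT_NAME:-}" ] && command -v az >/dev/null 2>&1; then
  az ml online-endpoint invoke --name "$ENDPOINT_NAME" --request-file sample_request.json
else
  echo "Set ENDPOINT_NAME and sign in with the Azure CLI to invoke the endpoint."
fi
```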

Clean-up resources

Now that you've successfully scored with your endpoint, you can delete it so you don't incur ongoing costs. Replace <ENDPOINT-NAME> with the name of your endpoint:

az ml online-endpoint delete --name <ENDPOINT-NAME>

Next steps

For more information about using R with Azure Machine Learning, see Overview of R capabilities in Azure Machine Learning.