Deploy a model locally

Learn how to use Azure Machine Learning to deploy a model as a web service on your Azure Machine Learning compute instance. Use compute instances if one of the following conditions is true:

- You need to quickly deploy and validate your model.

- You are testing a model that is under development.

Tip

Deploying a model from a Jupyter Notebook on a compute instance to a web service on the same VM is a local deployment. In this case, the "local" computer is the compute instance.

Note

Azure Machine Learning endpoints (v2) provide an improved, simpler deployment experience. Endpoints support both real-time and batch inference scenarios, and they provide a unified interface to invoke and manage model deployments across compute types. For more information, see What are Azure Machine Learning endpoints?

Prerequisites

- An Azure Machine Learning workspace with a compute instance running. For more information, see Quickstart: Get started with Azure Machine Learning.

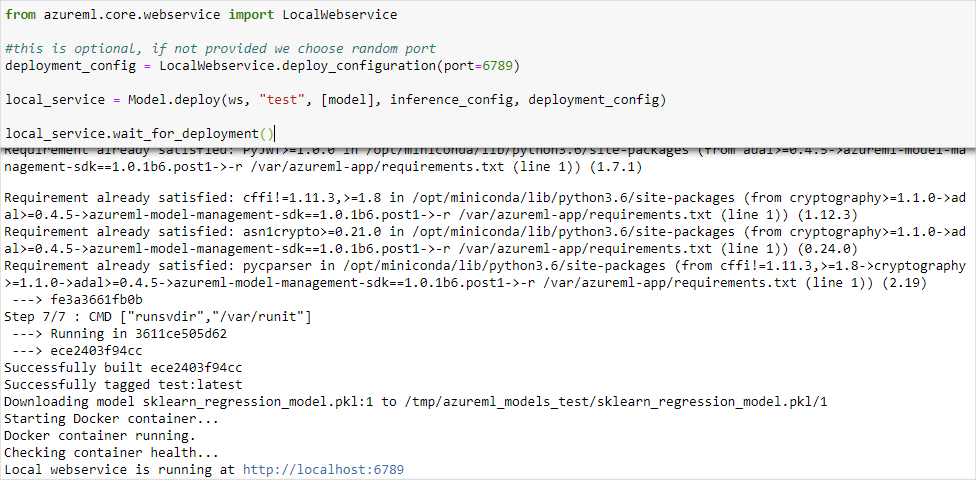

Deploy to the compute instances

An example notebook that demonstrates local deployments is included on your compute instance. Use the following steps to load the notebook and deploy the model as a web service on the VM:

From Azure Machine Learning studio, select "Notebooks", and then select how-to-use-azureml/deployment/deploy-to-local/register-model-deploy-local.ipynb under "Sample notebooks". Clone this notebook to your user folder.

Find the notebook cloned in step 1, choose or create a Compute Instance to run the notebook.

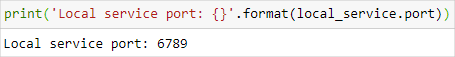

The notebook displays the URL and port that the service is running on. For example,

https://localhost:6789. You can also run the cell containingprint('Local service port: {}'.format(local_service.port))to display the port.

To test the service from a compute instance, use the

https://localhost:<local_service.port>URL. To test from a remote client, get the public URL of the service running on the compute instance. The public URL can be determined use the following formula;- Notebook VM:

https://<vm_name>-<local_service_port>.<azure_region_of_workspace>.notebooks.ml.azure.cn/score. - Compute instance:

https://<vm_name>-<local_service_port>.<azure_region_of_workspace>.instances.ml.azure.cn/score.

For example,

- Notebook VM:

https://vm-name-6789.chinaeast2.notebooks.ml.azure.cn/score - Compute instance:

https://vm-name-6789.chinaeast2.instances.ml.azure.cn/score

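The URL formulas above can be captured in a small helper that builds the public scoring URL from the VM name, port, and region. This is a hypothetical sketch rather than an Azure Machine Learning API. The function name `public_scoring_url` and its `kind` values are illustrative, and the domain suffixes are copied from the formulas above.

```python
# Domain suffixes taken from the formulas above (azure.cn endpoints).
# The dictionary keys are hypothetical names chosen for this sketch.
_DOMAINS = {
    "compute_instance": "instances.ml.azure.cn",
    "notebook_vm": "notebooks.ml.azure.cn",
}


def public_scoring_url(vm_name: str, port: int, region: str,
                       kind: str = "compute_instance") -> str:
    """Return https://<vm_name>-<port>.<region>.<domain>/score."""
    if kind not in _DOMAINS:
        raise ValueError(f"unknown kind: {kind!r}")
    return f"https://{vm_name}-{port}.{region}.{_DOMAINS[kind]}/score"


print(public_scoring_url("vm-name", 6789, "chinaeast2"))
# https://vm-name-6789.chinaeast2.instances.ml.azure.cn/score
```

Pass `kind="notebook_vm"` to get the Notebook VM form of the URL.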

Test the service

To submit sample data to the running service, use the following code. Replace the value of service_url with the URL from the previous step.

Note

When you authenticate to a deployment on the compute instance, authentication uses Azure Active Directory (Azure AD). In the example code, the call to interactive_auth.get_authentication_header() authenticates you through Azure AD and returns a header that you can then use to authenticate to the service on the compute instance. For more information, see Set up authentication for Azure Machine Learning resources and workflows.

Deployments on Azure Kubernetes Service or Azure Container Instances use a different authentication method. For more information, see Configure authentication for Azure Machine Learning models deployed as web services.

```python
import json

import requests
from azureml.core.authentication import InteractiveLoginAuthentication

# Get a token to authenticate to the compute instance from remote
interactive_auth = InteractiveLoginAuthentication()
auth_header = interactive_auth.get_authentication_header()

# Create and submit a request using the auth header
headers = auth_header

# Add content type header
headers.update({'Content-Type': 'application/json'})

# Sample data to send to the service
test_sample = json.dumps({'data': [
    [1, 2, 3, 4, 5, 6, 7, 8, 9, 10],
    [10, 9, 8, 7, 6, 5, 4, 3, 2, 1]
]})
test_sample = bytes(test_sample, encoding='utf8')

# Replace with the URL for your compute instance, as determined from the previous section
service_url = "https://vm-name-6789.chinaeast2.notebooks.ml.azure.cn/score"
# For a compute instance, the URL would be https://vm-name-6789.chinaeast2.instances.ml.azure.cn/score

resp = requests.post(service_url, data=test_sample, headers=headers)
print("prediction:", resp.text)
```
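If you prefer not to depend on the requests package, you can build the same scoring request with Python's standard library. This is a minimal sketch and is not part of the sample notebook. `build_scoring_request` is a hypothetical helper, and the `"Bearer <token>"` header is a placeholder for the dictionary returned by `interactive_auth.get_authentication_header()`. The sketch assumes the `{"data": rows}` payload shape from the example above is what the deployed scoring script expects. Unlike the example above, it copies the auth header instead of updating it in place.

```python
import json
import urllib.request


def build_scoring_request(service_url, rows, auth_header):
    """Build (but do not send) a POST request for the /score endpoint.

    rows: list of feature rows, sent as {"data": rows} like the example above.
    auth_header: dict of auth headers, e.g. from get_authentication_header().
    """
    # Copy so the caller's auth header dict is left unchanged
    headers = dict(auth_header)
    headers["Content-Type"] = "application/json"
    body = json.dumps({"data": rows}).encode("utf-8")
    return urllib.request.Request(service_url, data=body,
                                  headers=headers, method="POST")


# Placeholder header; replace with the header from InteractiveLoginAuthentication
req = build_scoring_request(
    "https://vm-name-6789.chinaeast2.instances.ml.azure.cn/score",
    [[1, 2, 3, 4, 5, 6, 7, 8, 9, 10]],
    {"Authorization": "Bearer <token>"},
)

# To actually send it (requires network access and a valid token):
# with urllib.request.urlopen(req) as resp:
#     print("prediction:", resp.read().decode("utf-8"))
```

urllib normalizes stored header names, so `Content-Type` is kept as `Content-type`. HTTP header names are case-insensitive, so the service sees the same request.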