September 2019

These features and Azure Databricks platform improvements were released in September 2019.

Note

In most cases, the release dates and content listed below correspond to the actual deployment on Azure Public Cloud.

They provide the evolution history of the Azure Databricks service on Azure Public Cloud for your reference and may not apply to Azure operated by 21Vianet.

Note

Releases are staged, so your Azure Databricks account might not be updated for up to a week after the initial release date.

Databricks Runtime 5.2 support ends

September 30, 2019

Support for Databricks Runtime 5.2 ended on September 30. See Databricks support lifecycles.

Launch pool-backed automated clusters that use Databricks Light (Public Preview)

September 26 - October 1, 2019: Version 3.3

When we introduced pools in July, you couldn't select Databricks Light as the runtime version when you configured a pool-backed cluster for an automated job. Now you can, so you get both fast cluster start times and cost-efficient clusters!
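As an illustration, a job definition for the Databricks Jobs API 2.0 can point its automated cluster at a pool through `instance_pool_id` and select Databricks Light through `spark_version`. The sketch below only builds the request body. It assumes `apache-spark-2.4.x-scala2.11` is the Databricks Light runtime key in your workspace, and the pool ID, job name, helper name `build_job_payload`, and script path are hypothetical placeholders.

```python
import json

# Hypothetical placeholders: substitute your own pool ID and script location.
POOL_ID = "0101-120000-brick1-pool-ABCD1234"
# Assumed Databricks Light runtime key; check the versions listed in your workspace.
DATABRICKS_LIGHT = "apache-spark-2.4.x-scala2.11"


def build_job_payload(name: str, pool_id: str, python_file: str, num_workers: int = 2) -> dict:
    """Build a Jobs API 2.0 create request whose automated cluster draws from a pool."""
    return {
        "name": name,
        "new_cluster": {
            "spark_version": DATABRICKS_LIGHT,
            # The node type is taken from the pool, so node_type_id is omitted.
            "instance_pool_id": pool_id,
            "num_workers": num_workers,
        },
        # Databricks Light targets JAR, Python, and spark-submit jobs rather than notebooks.
        "spark_python_task": {"python_file": python_file},
    }


payload = build_job_payload("nightly-etl", POOL_ID, "dbfs:/jobs/etl.py")
print(json.dumps(payload, indent=2))
```

The printed JSON could then be sent as the body of a `POST /api/2.0/jobs/create` request.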

Azure SQL Database gateway IP addresses will change on October 14, 2019

On October 14, Microsoft will migrate traffic to new gateways in several regions. If your workspace is in an affected region and you have configured user-defined routes (UDRs) for the consolidated metastore from your own Azure Databricks virtual network (using VNet injection), you may need to update the metastore IP address in those routes when the gateway IP addresses change. Consult the Azure SQL Database gateway IP addresses table for the latest list of IP addresses for your region.
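To check whether your routes need updating, you can compare the address prefixes in your route table with the gateway IPs currently listed for your region. The sketch below is a minimal, hypothetical helper (`plan_metastore_routes` is not part of any Azure tool) built on Python's standard `ipaddress` module. The addresses in the example are RFC 5737 documentation placeholders, not real Azure SQL Database gateway IPs.

```python
import ipaddress


def plan_metastore_routes(current_prefixes, gateway_ips):
    """Compare existing UDR address prefixes with the latest gateway IP list.

    Returns (to_add, to_remove):
      to_add    - /32 prefixes for listed gateway IPs not covered by any current route
      to_remove - current prefixes that cover none of the listed gateway IPs
    """
    wanted = {ipaddress.ip_network(f"{ip}/32") for ip in gateway_ips}
    current = {ipaddress.ip_network(p) for p in current_prefixes}

    to_add = sorted(n for n in wanted if not any(n.subnet_of(c) for c in current))
    to_remove = sorted(c for c in current if not any(n.subnet_of(c) for n in wanted))
    return [str(n) for n in to_add], [str(c) for c in to_remove]


# Placeholder data: one existing route, and a list containing one old and one new gateway.
to_add, to_remove = plan_metastore_routes(["198.51.100.10/32"], ["198.51.100.10", "203.0.113.25"])
print(to_add, to_remove)
```

Whether to delete routes for old gateways right away depends on the migration schedule for your region, so treat `to_remove` as a review list rather than an automatic action.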

Azure Data Lake Storage credential passthrough now supported on standard clusters and Scala (Public Preview)

September 12-17, 2019: Version 3.2

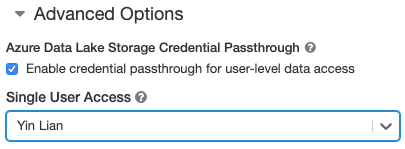

Credential passthrough can now be used with Python, SQL, and Scala on standard clusters running Databricks Runtime 5.5 and above, as well as SparkR on Databricks Runtime 6.0 Beta. Until now, credential passthrough required high concurrency clusters, which do not support Scala.

When a cluster is enabled for Azure Data Lake Storage credential passthrough, commands run on that cluster can read and write data in Azure Data Lake Storage without requiring users to configure service principal credentials to access the storage. Credentials are taken automatically from the identity of the user who initiates the action.
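To make the difference concrete, the sketch below contrasts the two approaches for an ADLS Gen2 account. `service_principal_conf` returns the standard Hadoop ABFS OAuth settings a user would otherwise have to set for a service principal; on a passthrough-enabled cluster that step disappears and a plain `abfss://` URI is enough. Both helper names, and the container, account, and path values, are hypothetical.

```python
def abfss_uri(container: str, account: str, path: str = "") -> str:
    """Return an ADLS Gen2 URI: abfss://<container>@<account>.dfs.core.windows.net/<path>."""
    return f"abfss://{container}@{account}.dfs.core.windows.net/{path.lstrip('/')}"


def service_principal_conf(account: str, client_id: str, secret: str, tenant_id: str) -> dict:
    """Spark settings needed to reach ADLS Gen2 with a service principal.

    A passthrough-enabled cluster does not need any of these.
    """
    host = f"{account}.dfs.core.windows.net"
    return {
        f"fs.azure.account.auth.type.{host}": "OAuth",
        f"fs.azure.account.oauth.provider.type.{host}":
            "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider",
        f"fs.azure.account.oauth2.client.id.{host}": client_id,
        f"fs.azure.account.oauth2.client.secret.{host}": secret,
        f"fs.azure.account.oauth2.client.endpoint.{host}":
            f"https://login.microsoftonline.com/{tenant_id}/oauth2/token",
    }


# In a notebook attached to a passthrough cluster (`spark` is predefined there),
# reading is just:
# df = spark.read.parquet(abfss_uri("raw", "mystorageacct", "events/2019/09"))
```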

For security, only one user can run commands on a standard cluster that has credential passthrough enabled. This single user is set when the cluster is created and can be changed by anyone with Can Manage permission on the cluster. Admins must ensure that the single user has at least Can Attach To permission on the cluster.

pandas DataFrames now render in notebooks without scaling

September 12-17, 2019: Version 3.2

In Azure Databricks notebooks, displayHTML was scaling some framed HTML content to fit the available width of the rendered notebook. While this behavior is desirable for images, it rendered wide pandas DataFrames poorly. But not anymore!

Python version selector display now dynamic

September 12-17, 2019: Version 3.2

When you select a Databricks Runtime version that doesn't support Python 2 (such as Databricks Runtime 6.0), the cluster creation page hides the Python version selector.

Databricks Runtime 6.0 Beta

September 12, 2019

Databricks Runtime 6.0 Beta brings many library upgrades and new features, including:

- New Scala and Java APIs for Delta Lake DML commands, as well as the vacuum and history utility commands.

- Enhanced DBFS FUSE v2 client for faster and more reliable reads and writes during model training.

- Support for multiple matplotlib plots per notebook cell.

- Update to Python 3.7, as well as updated numpy, pandas, matplotlib, and other libraries.

- Sunset of Python 2 support.

For more information, see the complete Databricks Runtime 6.0 (EoS) release notes.