Note

The release dates and content listed below correspond, in most cases, to the actual deployment on Azure Public Cloud.

They document the evolution of the Azure Databricks service on Azure Public Cloud for reference and may not apply to Azure operated by 21Vianet.

These features and Databricks platform improvements were released in June 2018.

RStudio integration

June 19, 2018: Version 2.74

Azure Databricks now integrates with RStudio Server, the popular IDE for R. With this integration, you can:

- Launch the RStudio UI directly from Azure Databricks.

- Load the SparkR and sparklyr packages in the RStudio IDE.

- Access, explore, and transform large datasets from the RStudio IDE using Apache Spark.

- Execute and monitor Spark jobs on an Azure Databricks cluster.

- Manage your code using version control.

- Use either the Open Source or Pro editions of RStudio Server on Azure Databricks.

RStudio integration requires the Premium plan. You must install the integration on a high concurrency cluster. For details, see RStudio on Azure Databricks.

Cluster log purge

June 19, 2018: Version 2.74

By default, cluster logs are retained for 30 days. You can now delete them immediately and permanently from the Workspace Storage tab in the Admin Console. See Purge workspace storage.

New regions

June 7, 2018

Azure Databricks is now available in the following regions:

- East Australia

- Southeast Australia

- South UK

- West UK

Trash folder

June 7, 2018: Version 2.73

A new Trash folder contains all notebooks, libraries, and folders that you have deleted. The Trash folder is automatically purged after 30 days. You can restore a deleted object by dragging it out of the Trash folder into another folder.

For details, see Delete an object.

Reduced log retention period

June 7, 2018: Version 2.73

Cluster logs are now retained for 30 days. They used to be retained indefinitely.

Gzipped API responses

June 7, 2018: Version 2.73

API requests sent with the Accept-Encoding: gzip header now return gzip-compressed responses.
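
A client opts in to compression simply by sending this header. The following Python sketch uses only the standard library; the endpoint path, the bearer-token header, and the helper names (build_request, decode_body, get_json) are assumptions for illustration, not part of this release. Because urllib.request does not decompress responses automatically, the sketch checks the Content-Encoding response header before parsing the JSON body.

```python
import gzip
import json
import urllib.request
from typing import Optional


def build_request(url: str, token: str) -> urllib.request.Request:
    """Build a GET request that asks the server for a gzip-compressed body.

    Assumption: the API authenticates with a bearer token.
    """
    return urllib.request.Request(
        url,
        headers={
            "Authorization": f"Bearer {token}",
            # Opt in to compression; a server may still reply uncompressed.
            "Accept-Encoding": "gzip",
        },
    )


def decode_body(raw: bytes, content_encoding: Optional[str]) -> dict:
    """Decompress the body only if the server says it is gzipped, then parse JSON."""
    if content_encoding and content_encoding.strip().lower() == "gzip":
        raw = gzip.decompress(raw)
    return json.loads(raw.decode("utf-8"))


def get_json(url: str, token: str) -> dict:
    """Hypothetical helper: send the request and return the decoded JSON."""
    with urllib.request.urlopen(build_request(url, token)) as resp:
        # urllib leaves the body compressed, so pass the header along.
        return decode_body(resp.read(), resp.headers.get("Content-Encoding"))
```

For example, get_json("https://<databricks-instance>/api/2.0/clusters/list", token) would return the parsed JSON, where <databricks-instance> and token are placeholders. Checking Content-Encoding rather than assuming gzip keeps the helper correct if a server ignores the request header.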

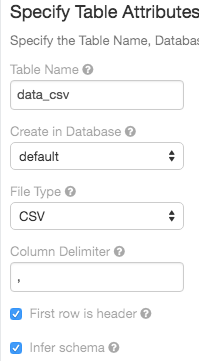

Table import UI

June 7, 2018: Version 2.73

The create table UI now supports an option to infer the schema of CSV files.
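
The UI performs inference for you. Conceptually, inference scans each column's values and picks the narrowest type that fits all of them. The Python sketch below is a simplified, hypothetical illustration of that idea, not the Azure Databricks implementation: the three type names (int, double, string), the widening order, and the treatment of empty cells as nulls are all assumptions.

```python
import csv
import io
from typing import Dict, List

# Assumed widening order: int fits inside double, and everything fits inside string.
_RANK = {"int": 0, "double": 1, "string": 2}


def _cell_type(value: str) -> str:
    """Classify a single CSV cell as 'int', 'double', or 'string'."""
    try:
        int(value)
        return "int"
    except ValueError:
        pass
    try:
        float(value)
        return "double"
    except ValueError:
        return "string"


def infer_schema(text: str) -> Dict[str, str]:
    """Hypothetical helper: infer a column -> type mapping from CSV text with a header row."""
    reader = csv.reader(io.StringIO(text))
    header = next(reader)
    types: List[str] = ["int"] * len(header)  # start narrow, widen as needed
    seen = [False] * len(header)
    for row in reader:
        for i, cell in enumerate(row[: len(header)]):
            if cell == "":
                continue  # empty cells are treated as nulls and don't constrain the type
            seen[i] = True
            cell_type = _cell_type(cell)
            if _RANK[cell_type] > _RANK[types[i]]:
                types[i] = cell_type
    # Columns with no non-empty values fall back to string.
    return {name: types[i] if seen[i] else "string" for i, name in enumerate(header)}
```

For example, infer_schema("id,price,name\n1,9.99,apple\n2,10,banana\n") returns {"id": "int", "price": "double", "name": "string"}. The price column widens to double because one of its values has a fractional part. When reading CSV files in code rather than through the UI, Spark's CSV reader exposes comparable behavior through its inferSchema option.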