
APPLIES TO: Azure Data Factory, Azure Synapse Analytics

You can use the Delete activity in Azure Data Factory to delete files or folders from on-premises or cloud storage stores. Use this activity to clean up or archive files when they're no longer needed.

Warning

Deleted files or folders cannot be restored (unless the storage has soft-delete enabled). Be cautious when using the Delete activity to delete files or folders.

Best practices

Here are some recommendations for using the Delete activity:

Back up your files before deleting them with the Delete activity in case you need to restore them in the future.

Make sure that the service has write permissions to delete folders or files from the storage store.

Make sure you aren't deleting files that are being written at the same time.

If you want to delete files or folders from an on-premises system, make sure you're using a self-hosted integration runtime with a version greater than 3.14.

Supported data stores

- Azure Blob storage

- Azure Data Lake Storage Gen2

- Azure Files

- File System

- FTP

- SFTP

- Amazon S3

- Amazon S3 Compatible Storage

- Google Cloud Storage

- Oracle Cloud Storage

- HDFS

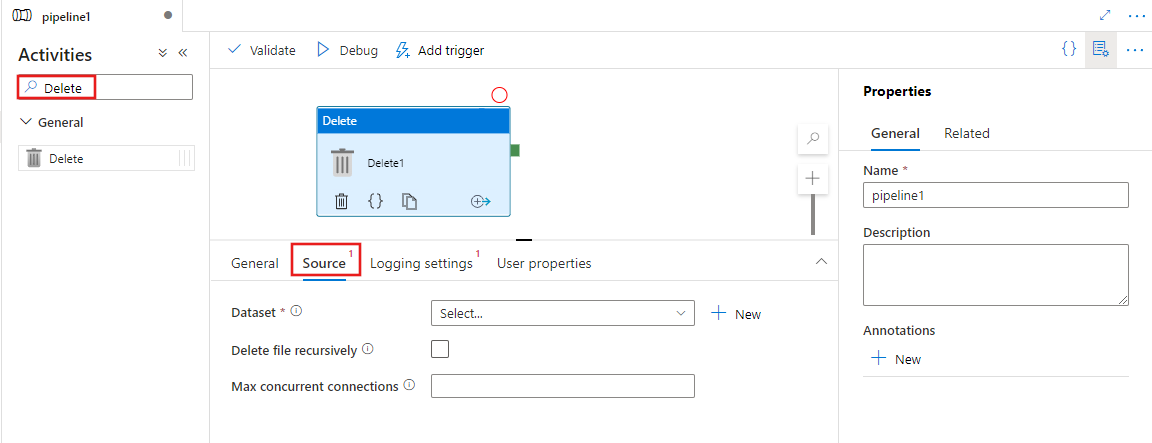

Create a Delete activity with UI

To use a Delete activity in a pipeline, complete the following steps:

Search for Delete in the pipeline Activities pane, and drag a Delete activity to the pipeline canvas.

Select the new Delete activity on the canvas if it isn't already selected, and its Source tab, to edit its details.

Select an existing or create a new Dataset specifying the files to be deleted. If multiple files are selected, optionally enable recursive deletion, which deletes data in any child folders as well. You can also specify a maximum number of concurrent connections for the operation.

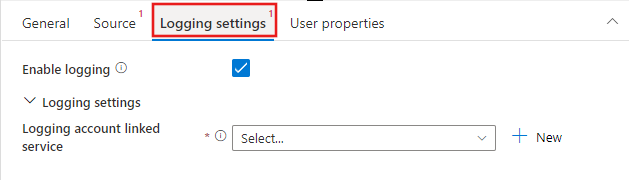

Optionally configure logging by selecting the Logging settings tab and selecting an existing or creating a new logging account linked service location to log results of the delete operations performed.

Syntax

```json
{
    "name": "DeleteActivity",
    "type": "Delete",
    "typeProperties": {
        "dataset": {
            "referenceName": "<dataset name>",
            "type": "DatasetReference"
        },
        "storeSettings": {
            "type": "<source type>",
            "recursive": true/false,
            "maxConcurrentConnections": <number>
        },
        "enableLogging": true/false,
        "logStorageSettings": {
            "linkedServiceName": {
                "referenceName": "<name of linked service>",
                "type": "LinkedServiceReference"
            },
            "path": "<path to save log file>"
        }
    }
}
```

Type properties

| Property | Description | Required |
|---|---|---|
| dataset | Provides the dataset reference that determines which files or folder to delete. | Yes |
| recursive | Indicates whether files are deleted recursively from the subfolders or only from the specified folder. | No. The default is false. |
| maxConcurrentConnections | The number of connections used to connect to the storage store concurrently when deleting folders or files. | No. The default is 1. |
| enableLogging | Indicates whether to record the names of the deleted folders or files. If true, you must also provide a storage account to save the log file, so that you can track the behavior of the Delete activity by reading the log file. | No |
| logStorageSettings | Only applicable when enableLogging = true. A group of storage properties that specifies where to save the log file containing the names of the folders or files deleted by the Delete activity. | No |
| linkedServiceName | Only applicable when enableLogging = true. The linked service of Azure Storage or Azure Data Lake Storage Gen2 used to store the log file that contains the names of the folders or files deleted by the Delete activity. It must be configured with the same type of integration runtime as the one the Delete activity uses to delete files. | No |
| path | Only applicable when enableLogging = true. The path to save the log file in your storage account. If you don't provide a path, the service creates a container for you. | No |
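To make the relationship between `enableLogging` and `logStorageSettings` concrete, the following Python sketch assembles a Delete activity definition as a dictionary and serializes it to JSON. The helper `build_delete_activity` and its parameter names are hypothetical and not part of any Azure SDK; the sketch only mirrors the property names in the syntax and table above, and it assumes logging should be enabled exactly when a log linked service is supplied.

```python
import json
from typing import Any, Dict, Optional


def build_delete_activity(
    name: str,
    dataset_name: str,
    store_settings_type: str,
    recursive: bool = False,
    max_concurrent_connections: Optional[int] = None,
    log_linked_service: Optional[str] = None,
    log_path: Optional[str] = None,
) -> Dict[str, Any]:
    """Hypothetical helper: build a Delete activity definition as a dict."""
    store_settings: Dict[str, Any] = {"type": store_settings_type, "recursive": recursive}
    if max_concurrent_connections is not None:
        store_settings["maxConcurrentConnections"] = max_concurrent_connections

    type_properties: Dict[str, Any] = {
        "dataset": {"referenceName": dataset_name, "type": "DatasetReference"},
        "storeSettings": store_settings,
        # Assumption: logging is on exactly when a log linked service is given.
        "enableLogging": log_linked_service is not None,
    }
    if log_linked_service is not None:
        log_settings: Dict[str, Any] = {
            "linkedServiceName": {
                "referenceName": log_linked_service,
                "type": "LinkedServiceReference",
            }
        }
        # path is optional; per the table, the service creates a container if omitted.
        if log_path is not None:
            log_settings["path"] = log_path
        type_properties["logStorageSettings"] = log_settings

    return {"name": name, "type": "Delete", "typeProperties": type_properties}


if __name__ == "__main__":
    activity = build_delete_activity(
        "DeleteActivity", "PartitionedFolder", "AzureBlobStorageReadSettings",
        recursive=True, log_linked_service="BloblinkedService", log_path="mycontainer/log",
    )
    print(json.dumps(activity, indent=4))
```

Generating the definition from one function keeps `logStorageSettings` from being emitted when logging is off, which is the main consistency rule the table describes.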

Monitoring

There are two places where you can see and monitor the results of the Delete activity:

- From the output of the Delete activity.

- From the log file.

Sample output of the Delete activity

```json
{
    "datasetName": "AmazonS3",
    "type": "AmazonS3Object",
    "prefix": "test",
    "bucketName": "adf",
    "recursive": true,
    "isWildcardUsed": false,
    "maxConcurrentConnections": 2,
    "filesDeleted": 4,
    "logPath": "https://sample.blob.core.chinacloudapi.cn/mycontainer/5c698705-a6e2-40bf-911e-e0a927de3f07",
    "effectiveIntegrationRuntime": "MyAzureIR (China East 2)",
    "executionDuration": 650
}
```
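If you post-process the activity output outside the service (for example, in a monitoring script that has already retrieved the output JSON), a minimal Python sketch like the following can read the `filesDeleted` and `logPath` fields. The function name `check_delete_output` and the minimum-count check are illustrative assumptions; the embedded JSON is trimmed from the sample output above.

```python
import json
from typing import Optional, Tuple

# Trimmed from the sample output above (only the fields this sketch reads).
SAMPLE_OUTPUT = """
{
    "datasetName": "AmazonS3",
    "filesDeleted": 4,
    "logPath": "https://sample.blob.core.chinacloudapi.cn/mycontainer/5c698705-a6e2-40bf-911e-e0a927de3f07"
}
"""


def check_delete_output(output_json: str, expected_min_files: int = 1) -> Tuple[int, Optional[str]]:
    """Return (filesDeleted, logPath); raise if fewer files than expected were deleted."""
    output = json.loads(output_json)
    files_deleted = int(output.get("filesDeleted", 0))
    if files_deleted < expected_min_files:
        raise ValueError(
            f"Delete activity on dataset {output.get('datasetName')!r} deleted "
            f"{files_deleted} file(s); expected at least {expected_min_files}"
        )
    # .get() is used because this sketch does not assume logPath is always present.
    return files_deleted, output.get("logPath")


if __name__ == "__main__":
    count, log_path = check_delete_output(SAMPLE_OUTPUT)
    print(count, log_path)
```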

Sample log file of the Delete activity

| Name | Category | Status | Error |
|---|---|---|---|
| test1/yyy.json | File | Deleted | |
| test2/hello789.txt | File | Deleted | |
| test2/test3/hello000.txt | File | Deleted | |
| test2/test3/zzz.json | File | Deleted | |
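The following sketch summarizes a log file with the columns shown above by counting rows per status and collecting any rows that report an error. It assumes the log is stored as comma-separated text with a header row of Name, Category, Status, and Error; verify the actual format of your log files before relying on it. The function name `summarize_delete_log` is hypothetical.

```python
import csv
import io
from collections import Counter
from typing import List, Tuple

# Assumed comma-separated rendering of the sample log above.
SAMPLE_LOG = """Name,Category,Status,Error
test1/yyy.json,File,Deleted,
test2/hello789.txt,File,Deleted,
test2/test3/hello000.txt,File,Deleted,
test2/test3/zzz.json,File,Deleted,
"""


def summarize_delete_log(log_text: str) -> Tuple[Counter, List[Tuple[str, str]]]:
    """Count rows per Status and collect (Name, Error) pairs for rows with an error."""
    status_counts: Counter = Counter()
    failures: List[Tuple[str, str]] = []
    for row in csv.DictReader(io.StringIO(log_text)):
        status_counts[row["Status"]] += 1
        error = (row.get("Error") or "").strip()
        if error:
            failures.append((row["Name"], error))
    return status_counts, failures


if __name__ == "__main__":
    counts, failures = summarize_delete_log(SAMPLE_LOG)
    print(dict(counts), failures)
```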

Examples of using the Delete activity

Delete specific folders or files

The store has the following folder structure:

```
Root/
    Folder_A_1/
        1.txt
        2.txt
        3.csv
    Folder_A_2/
        4.txt
        5.csv
        Folder_B_1/
            6.txt
            7.csv
        Folder_B_2/
            8.txt
```

The following table shows what remains in the store after the Delete activity runs with different combinations of property values from the dataset and the Delete activity:

| folderPath | fileName | recursive | Remaining under Root/ after the Delete activity runs |
|---|---|---|---|
| Root/Folder_A_2 | NULL | False | Folder_A_1/ (1.txt, 2.txt, 3.csv); Folder_A_2/ (no files directly in it); Folder_A_2/Folder_B_1/ (6.txt, 7.csv); Folder_A_2/Folder_B_2/ (8.txt) |
| Root/Folder_A_2 | NULL | True | Folder_A_1/ (1.txt, 2.txt, 3.csv) |
| Root/Folder_A_2 | *.txt | False | Folder_A_1/ (1.txt, 2.txt, 3.csv); Folder_A_2/ (5.csv); Folder_A_2/Folder_B_1/ (6.txt, 7.csv); Folder_A_2/Folder_B_2/ (8.txt) |
| Root/Folder_A_2 | *.txt | True | Folder_A_1/ (1.txt, 2.txt, 3.csv); Folder_A_2/ (5.csv); Folder_A_2/Folder_B_1/ (7.csv); Folder_A_2/Folder_B_2/ (empty) |
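To reason about the four rows above, the following Python sketch models the same folder structure in memory and applies the rules the table illustrates: without `recursive`, only files directly under `folderPath` are candidates; with `recursive`, files in subfolders are candidates too; `fileName` acts as a wildcard on the file name; and when `fileName` is empty and `recursive` is true, the folder itself is removed. This is a local model written to reproduce this table, not the service's implementation, and `simulate_delete` is a hypothetical name.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath
from typing import Optional, Set, Tuple

# The example folder structure: every file, plus every folder (including ones that become empty).
FILES: Set[str] = {
    "Root/Folder_A_1/1.txt", "Root/Folder_A_1/2.txt", "Root/Folder_A_1/3.csv",
    "Root/Folder_A_2/4.txt", "Root/Folder_A_2/5.csv",
    "Root/Folder_A_2/Folder_B_1/6.txt", "Root/Folder_A_2/Folder_B_1/7.csv",
    "Root/Folder_A_2/Folder_B_2/8.txt",
}
FOLDERS: Set[str] = {
    "Root", "Root/Folder_A_1", "Root/Folder_A_2",
    "Root/Folder_A_2/Folder_B_1", "Root/Folder_A_2/Folder_B_2",
}


def is_under(path: PurePosixPath, base: PurePosixPath) -> bool:
    """True if path is base itself or lies anywhere below it."""
    return path == base or base in path.parents


def simulate_delete(files: Set[str], folders: Set[str], folder_path: str,
                    file_name: Optional[str] = None,
                    recursive: bool = False) -> Tuple[Set[str], Set[str]]:
    """Return (remaining_files, remaining_folders) under the rules described above."""
    base = PurePosixPath(folder_path)
    pattern = file_name if file_name is not None else "*"  # NULL fileName matches every file
    remaining_files: Set[str] = set()
    for f in files:
        p = PurePosixPath(f)
        if not is_under(p.parent, base):
            remaining_files.add(f)  # outside folderPath: never touched
            continue
        direct_child = p.parent == base
        if (recursive or direct_child) and fnmatchcase(p.name, pattern):
            continue  # this file is deleted
        remaining_files.add(f)
    if recursive and file_name is None:
        # Modeled on row 2: the folder and all of its subfolders disappear.
        remaining_folders = {d for d in folders if not is_under(PurePosixPath(d), base)}
    else:
        remaining_folders = set(folders)  # folders are kept, even if they end up empty
    return remaining_files, remaining_folders


if __name__ == "__main__":
    for name, rec in [(None, False), (None, True), ("*.txt", False), ("*.txt", True)]:
        files_left, _ = simulate_delete(FILES, FOLDERS, "Root/Folder_A_2", name, rec)
        print(name, rec, sorted(files_left))
```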

Periodically clean up time-partitioned folders or files

You can create a pipeline that periodically cleans up time-partitioned folders or files. For example, suppose the folder structure is similar to /mycontainer/2018/12/14/*.csv. You can use the system variable from the schedule trigger (@trigger().scheduledTime) to identify which folder or files to delete in each pipeline run.

Sample pipeline

```json
{
    "name":"cleanup_time_partitioned_folder",
    "properties":{
        "activities":[
            {
                "name":"DeleteOneFolder",
                "type":"Delete",
                "dependsOn":[],
                "policy":{
                    "timeout":"7.00:00:00",
                    "retry":0,
                    "retryIntervalInSeconds":30,
                    "secureOutput":false,
                    "secureInput":false
                },
                "userProperties":[],
                "typeProperties":{
                    "dataset":{
                        "referenceName":"PartitionedFolder",
                        "type":"DatasetReference",
                        "parameters":{
                            "TriggerTime":{
                                "value":"@formatDateTime(pipeline().parameters.TriggerTime, 'yyyy/MM/dd')",
                                "type":"Expression"
                            }
                        }
                    },
                    "logStorageSettings":{
                        "linkedServiceName":{
                            "referenceName":"BloblinkedService",
                            "type":"LinkedServiceReference"
                        },
                        "path":"mycontainer/log"
                    },
                    "enableLogging":true,
                    "storeSettings":{
                        "type":"AzureBlobStorageReadSettings",
                        "recursive":true
                    }
                }
            }
        ],
        "parameters":{
            "TriggerTime":{
                "type":"string"
            }
        },
        "annotations":[]
    }
}
```

Sample dataset

```json
{
    "name":"PartitionedFolder",
    "properties":{
        "linkedServiceName":{
            "referenceName":"BloblinkedService",
            "type":"LinkedServiceReference"
        },
        "parameters":{
            "TriggerTime":{
                "type":"string"
            }
        },
        "annotations":[],
        "type":"Binary",
        "typeProperties":{
            "location":{
                "type":"AzureBlobStorageLocation",
                "folderPath":{
                    "value":"@dataset().TriggerTime",
                    "type":"Expression"
                },
                "container":{
                    "value":"mycontainer",
                    "type":"Expression"
                }
            }
        }
    }
}
```

Sample trigger

```json
{
    "name": "DailyTrigger",
    "properties": {
        "runtimeState": "Started",
        "pipelines": [
            {
                "pipelineReference": {
                    "referenceName": "cleanup_time_partitioned_folder",
                    "type": "PipelineReference"
                },
                "parameters": {
                    "TriggerTime": "@trigger().scheduledTime"
                }
            }
        ],
        "type": "ScheduleTrigger",
        "typeProperties": {
            "recurrence": {
                "frequency": "Day",
                "interval": 1,
                "startTime": "2018-12-13T00:00:00.000Z",
                "timeZone": "UTC",
                "schedule": {
                    "minutes": [
                        59
                    ],
                    "hours": [
                        23
                    ]
                }
            }
        }
    }
}
```
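To check which folder each run targets, the following Python sketch lists the first few run times of the DailyTrigger schedule above (daily at 23:59 UTC, starting 2018-12-13) and derives each run's folder by emulating `formatDateTime(..., 'yyyy/MM/dd')` with `strftime("%Y/%m/%d")`. It's a local illustration, not something the service runs; it assumes `scheduledTime` is a UTC ISO 8601 timestamp, and the function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import List


def folder_for_trigger_time(trigger_time: str) -> str:
    """Emulate formatDateTime(TriggerTime, 'yyyy/MM/dd') for a UTC ISO 8601 string."""
    # fromisoformat() in older Python versions rejects a trailing "Z", so normalize it.
    ts = datetime.fromisoformat(trigger_time.replace("Z", "+00:00"))
    return ts.strftime("%Y/%m/%d")


def daily_run_times(start: datetime, hour: int, minute: int, count: int) -> List[datetime]:
    """Daily runs at hour:minute (frequency Day, interval 1), on or after start."""
    first = start.replace(hour=hour, minute=minute, second=0, microsecond=0)
    if first < start:
        first += timedelta(days=1)
    return [first + timedelta(days=i) for i in range(count)]


if __name__ == "__main__":
    start = datetime(2018, 12, 13, tzinfo=timezone.utc)  # startTime from the trigger
    for run in daily_run_times(start, hour=23, minute=59, count=3):
        print(run.isoformat(), "->", "mycontainer/" + folder_for_trigger_time(run.isoformat()))
```

With this schedule, each run formats its own scheduled date, so it targets the partition for the same day. If you want each run to clean up an older partition instead, you would need to shift the timestamp before formatting it; that variation isn't part of the sample.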

Clean up expired files that were last modified before 2018-01-01

You can create a pipeline to clean up old or expired files by using a file attribute filter on the last-modified time (modifiedDatetimeEnd in the following sample).

Sample pipeline

```json
{
    "name":"CleanupExpiredFiles",
    "properties":{
        "activities":[
            {
                "name":"DeleteFilebyLastModified",
                "type":"Delete",
                "dependsOn":[],
                "policy":{
                    "timeout":"7.00:00:00",
                    "retry":0,
                    "retryIntervalInSeconds":30,
                    "secureOutput":false,
                    "secureInput":false
                },
                "userProperties":[],
                "typeProperties":{
                    "dataset":{
                        "referenceName":"BlobFilesLastModifiedBefore201811",
                        "type":"DatasetReference"
                    },
                    "logStorageSettings":{
                        "linkedServiceName":{
                            "referenceName":"BloblinkedService",
                            "type":"LinkedServiceReference"
                        },
                        "path":"mycontainer/log"
                    },
                    "enableLogging":true,
                    "storeSettings":{
                        "type":"AzureBlobStorageReadSettings",
                        "recursive":true,
                        "modifiedDatetimeEnd":"2018-01-01T00:00:00.000Z"
                    }
                }
            }
        ],
        "annotations":[]
    }
}
```

Sample dataset

```json
{
    "name":"BlobFilesLastModifiedBefore201811",
    "properties":{
        "linkedServiceName":{
            "referenceName":"BloblinkedService",
            "type":"LinkedServiceReference"
        },
        "annotations":[],
        "type":"Binary",
        "typeProperties":{
            "location":{
                "type":"AzureBlobStorageLocation",
                "fileName":"*",
                "folderPath":"mydirectory",
                "container":"mycontainer"
            }
        }
    }
}
```
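The following sketch expresses the time-window filter as a plain Python function. It assumes the file-filter semantics commonly described for the file-based connectors: a file is selected when its last-modified time is on or after modifiedDatetimeStart and before modifiedDatetimeEnd, and an unset bound doesn't restrict the selection. With only modifiedDatetimeEnd set to 2018-01-01T00:00:00Z, as in the sample, only files last modified before that instant qualify. The function name and sample data are hypothetical.

```python
from datetime import datetime, timezone
from typing import Dict, List, Optional


def select_by_last_modified(
    last_modified: Dict[str, datetime],
    start: Optional[datetime] = None,  # modifiedDatetimeStart (inclusive, assumed)
    end: Optional[datetime] = None,    # modifiedDatetimeEnd (exclusive, assumed)
) -> List[str]:
    """Return the names of files whose last-modified time falls in [start, end)."""
    selected = []
    for name, modified in last_modified.items():
        if start is not None and modified < start:
            continue
        if end is not None and modified >= end:
            continue
        selected.append(name)
    return sorted(selected)


if __name__ == "__main__":
    utc = timezone.utc
    files = {
        "mydirectory/old.csv": datetime(2017, 12, 31, 23, 59, tzinfo=utc),
        "mydirectory/boundary.csv": datetime(2018, 1, 1, tzinfo=utc),
        "mydirectory/new.csv": datetime(2018, 6, 1, tzinfo=utc),
    }
    print(select_by_last_modified(files, end=datetime(2018, 1, 1, tzinfo=utc)))
```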

Move files by chaining the Copy activity and the Delete activity

You can move a file in a pipeline by using a Copy activity to copy it and then a Delete activity to delete the original. To move multiple files, chain a GetMetadata activity, a Filter activity, and a ForEach activity that contains a Copy activity followed by a Delete activity, as in the following sample.

Note

If you move an entire folder by defining a dataset that contains only a folder path, and then use a Copy activity and a Delete activity that both reference that dataset, be very careful. Make sure that no new files arrive in the folder between the copy operation and the delete operation. If a new file arrives after the Copy activity finishes but before the Delete activity starts, the Delete activity might delete that file, which hasn't been copied to the destination yet, when it deletes the entire folder.

Sample pipeline

```json
{
    "name":"MoveFiles",
    "properties":{
        "activities":[
            {
                "name":"GetFileList",
                "type":"GetMetadata",
                "dependsOn":[],
                "policy":{
                    "timeout":"7.00:00:00",
                    "retry":0,
                    "retryIntervalInSeconds":30,
                    "secureOutput":false,
                    "secureInput":false
                },
                "userProperties":[],
                "typeProperties":{
                    "dataset":{
                        "referenceName":"OneSourceFolder",
                        "type":"DatasetReference",
                        "parameters":{
                            "Container":{
                                "value":"@pipeline().parameters.SourceStore_Location",
                                "type":"Expression"
                            },
                            "Directory":{
                                "value":"@pipeline().parameters.SourceStore_Directory",
                                "type":"Expression"
                            }
                        }
                    },
                    "fieldList":[
                        "childItems"
                    ],
                    "storeSettings":{
                        "type":"AzureBlobStorageReadSettings",
                        "recursive":true
                    },
                    "formatSettings":{
                        "type":"BinaryReadSettings"
                    }
                }
            },
            {
                "name":"FilterFiles",
                "type":"Filter",
                "dependsOn":[
                    {
                        "activity":"GetFileList",
                        "dependencyConditions":[
                            "Succeeded"
                        ]
                    }
                ],
                "userProperties":[],
                "typeProperties":{
                    "items":{
                        "value":"@activity('GetFileList').output.childItems",
                        "type":"Expression"
                    },
                    "condition":{
                        "value":"@equals(item().type, 'File')",
                        "type":"Expression"
                    }
                }
            },
            {
                "name":"ForEachFile",
                "type":"ForEach",
                "dependsOn":[
                    {
                        "activity":"FilterFiles",
                        "dependencyConditions":[
                            "Succeeded"
                        ]
                    }
                ],
                "userProperties":[],
                "typeProperties":{
                    "items":{
                        "value":"@activity('FilterFiles').output.value",
                        "type":"Expression"
                    },
                    "batchCount":20,
                    "activities":[
                        {
                            "name":"CopyAFile",
                            "type":"Copy",
                            "dependsOn":[],
                            "policy":{
                                "timeout":"7.00:00:00",
                                "retry":0,
                                "retryIntervalInSeconds":30,
                                "secureOutput":false,
                                "secureInput":false
                            },
                            "userProperties":[],
                            "typeProperties":{
                                "source":{
                                    "type":"BinarySource",
                                    "storeSettings":{
                                        "type":"AzureBlobStorageReadSettings",
                                        "recursive":false,
                                        "deleteFilesAfterCompletion":false
                                    },
                                    "formatSettings":{
                                        "type":"BinaryReadSettings"
                                    },
                                    "recursive":false
                                },
                                "sink":{
                                    "type":"BinarySink",
                                    "storeSettings":{
                                        "type":"AzureBlobStorageWriteSettings"
                                    }
                                },
                                "enableStaging":false,
                                "dataIntegrationUnits":0
                            },
                            "inputs":[
                                {
                                    "referenceName":"OneSourceFile",
                                    "type":"DatasetReference",
                                    "parameters":{
                                        "Container":{
                                            "value":"@pipeline().parameters.SourceStore_Location",
                                            "type":"Expression"
                                        },
                                        "Directory":{
                                            "value":"@pipeline().parameters.SourceStore_Directory",
                                            "type":"Expression"
                                        },
                                        "filename":{
                                            "value":"@item().name",
                                            "type":"Expression"
                                        }
                                    }
                                }
                            ],
                            "outputs":[
                                {
                                    "referenceName":"OneDestinationFile",
                                    "type":"DatasetReference",
                                    "parameters":{
                                        "Container":{
                                            "value":"@pipeline().parameters.DestinationStore_Location",
                                            "type":"Expression"
                                        },
                                        "Directory":{
                                            "value":"@pipeline().parameters.DestinationStore_Directory",
                                            "type":"Expression"
                                        },
                                        "filename":{
                                            "value":"@item().name",
                                            "type":"Expression"
                                        }
                                    }
                                }
                            ]
                        },
                        {
                            "name":"DeleteAFile",
                            "type":"Delete",
                            "dependsOn":[
                                {
                                    "activity":"CopyAFile",
                                    "dependencyConditions":[
                                        "Succeeded"
                                    ]
                                }
                            ],
                            "policy":{
                                "timeout":"7.00:00:00",
                                "retry":0,
                                "retryIntervalInSeconds":30,
                                "secureOutput":false,
                                "secureInput":false
                            },
                            "userProperties":[],
                            "typeProperties":{
                                "dataset":{
                                    "referenceName":"OneSourceFile",
                                    "type":"DatasetReference",
                                    "parameters":{
                                        "Container":{
                                            "value":"@pipeline().parameters.SourceStore_Location",
                                            "type":"Expression"
                                        },
                                        "Directory":{
                                            "value":"@pipeline().parameters.SourceStore_Directory",
                                            "type":"Expression"
                                        },
                                        "filename":{
                                            "value":"@item().name",
                                            "type":"Expression"
                                        }
                                    }
                                },
                                "logStorageSettings":{
                                    "linkedServiceName":{
                                        "referenceName":"BloblinkedService",
                                        "type":"LinkedServiceReference"
                                    },
                                    "path":"container/log"
                                },
                                "enableLogging":true,
                                "storeSettings":{
                                    "type":"AzureBlobStorageReadSettings",
                                    "recursive":true
                                }
                            }
                        }
                    ]
                }
            }
        ],
        "parameters":{
            "SourceStore_Location":{
                "type":"String"
            },
            "SourceStore_Directory":{
                "type":"String"
            },
            "DestinationStore_Location":{
                "type":"String"
            },
            "DestinationStore_Directory":{
                "type":"String"
            }
        },
        "annotations":[]
    }
}
```

Sample datasets

Dataset used by GetMetadata activity to enumerate the file list.

```json
{
    "name":"OneSourceFolder",
    "properties":{
        "linkedServiceName":{
            "referenceName":"AzureStorageLinkedService",
            "type":"LinkedServiceReference"
        },
        "parameters":{
            "Container":{
                "type":"String"
            },
            "Directory":{
                "type":"String"
            }
        },
        "annotations":[],
        "type":"Binary",
        "typeProperties":{
            "location":{
                "type":"AzureBlobStorageLocation",
                "folderPath":{
                    "value":"@{dataset().Directory}",
                    "type":"Expression"
                },
                "container":{
                    "value":"@{dataset().Container}",
                    "type":"Expression"
                }
            }
        }
    }
}
```

Dataset for the data source used by the Copy activity and the Delete activity.

```json
{
    "name":"OneSourceFile",
    "properties":{
        "linkedServiceName":{
            "referenceName":"AzureStorageLinkedService",
            "type":"LinkedServiceReference"
        },
        "parameters":{
            "Container":{
                "type":"String"
            },
            "Directory":{
                "type":"String"
            },
            "filename":{
                "type":"string"
            }
        },
        "annotations":[],
        "type":"Binary",
        "typeProperties":{
            "location":{
                "type":"AzureBlobStorageLocation",
                "fileName":{
                    "value":"@dataset().filename",
                    "type":"Expression"
                },
                "folderPath":{
                    "value":"@{dataset().Directory}",
                    "type":"Expression"
                },
                "container":{
                    "value":"@{dataset().Container}",
                    "type":"Expression"
                }
            }
        }
    }
}
```

Dataset for the data destination used by the Copy activity.

```json
{
    "name":"OneDestinationFile",
    "properties":{
        "linkedServiceName":{
            "referenceName":"AzureStorageLinkedService",
            "type":"LinkedServiceReference"
        },
        "parameters":{
            "Container":{
                "type":"String"
            },
            "Directory":{
                "type":"String"
            },
            "filename":{
                "type":"string"
            }
        },
        "annotations":[],
        "type":"Binary",
        "typeProperties":{
            "location":{
                "type":"AzureBlobStorageLocation",
                "fileName":{
                    "value":"@dataset().filename",
                    "type":"Expression"
                },
                "folderPath":{
                    "value":"@{dataset().Directory}",
                    "type":"Expression"
                },
                "container":{
                    "value":"@{dataset().Container}",
                    "type":"Expression"
                }
            }
        }
    }
}
```
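To show why the MoveFiles sample copies and deletes one file at a time, the following Python sketch mirrors its logic on a local file system. It takes one snapshot of the files directly in the source folder (like GetFileList followed by FilterFiles), then, for each file, copies it and deletes the original only after the copy returns without raising (like CopyAFile followed by DeleteAFile with a Succeeded dependency). A file that arrives after the snapshot is never deleted, which avoids the race described in the note about moving an entire folder. The sketch runs sequentially, whereas the ForEach in the sample uses batchCount 20; the function names and the local-disk setting are assumptions for illustration only.

```python
import shutil
import tempfile
from pathlib import Path
from typing import List


def move_files(source_dir: Path, dest_dir: Path) -> List[str]:
    """Copy each file in source_dir to dest_dir, then delete the original."""
    dest_dir.mkdir(parents=True, exist_ok=True)
    # GetFileList + FilterFiles: one snapshot of the direct children that are files.
    files = sorted(p for p in source_dir.iterdir() if p.is_file())
    moved: List[str] = []
    for src in files:  # ForEachFile (sequential in this sketch)
        shutil.copy2(src, dest_dir / src.name)  # CopyAFile
        src.unlink()  # DeleteAFile: reached only if the copy did not raise
        moved.append(src.name)
    return moved


def demo() -> List[str]:
    """Move two files from a temporary source folder, leaving a subfolder in place."""
    with tempfile.TemporaryDirectory() as tmp:
        src = Path(tmp, "source")
        dst = Path(tmp, "destination")
        (src / "subfolder").mkdir(parents=True)
        for name in ("a.txt", "b.csv"):
            (src / name).write_text(name)
        moved = move_files(src, dst)
        # The files now exist only in the destination; the subfolder was not touched.
        assert sorted(p.name for p in dst.iterdir()) == ["a.txt", "b.csv"]
        assert [p.name for p in src.iterdir()] == ["subfolder"]
        return moved


if __name__ == "__main__":
    print(demo())
```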

You can also get a template for moving files.

Known limitations

The Delete activity doesn't support deleting a list of folders described by a wildcard.

When you use the file attribute filters modifiedDatetimeStart and modifiedDatetimeEnd in the Delete activity to select files to delete, make sure to also set "wildcardFileName": "*" in the Delete activity.

Related content

Learn more about moving files in Azure Data Factory and Synapse pipelines.