Use question answering to answer questions

APPLIES TO: SDK v4

The question answering feature of Azure Cognitive Service for Language provides cloud-based natural language processing (NLP) that allows you to create a natural conversational layer over your data. It's used to find the most appropriate answer for any input from your custom knowledge base of information.

This article describes how to use the question answering feature in your bot.

Prerequisites

- If you don't have an Azure subscription, create a trial subscription before you begin.

- A language resource in Language Studio, with the custom question answering feature enabled.

- A copy of the Custom Question Answering sample in C# or JavaScript.

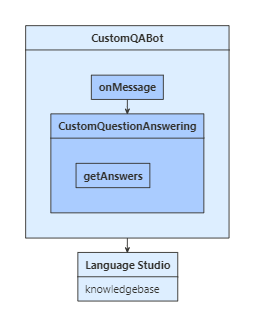

About this sample

To use question answering in your bot, you need an existing knowledge base. Your bot can then use the knowledge base to answer the user's questions.

If you need to create a new knowledge base for a Bot Framework SDK bot, see the README for the custom question answering sample.

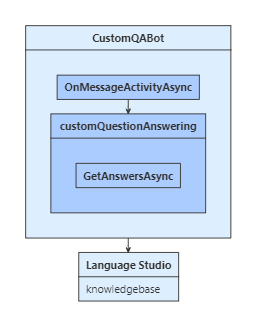

OnMessageActivityAsync is called for each user input received. When called, it reads the configuration settings from the sample's appsettings.json file and connects to your knowledge base.

The user's input is sent to your knowledge base and the best returned answer is displayed back to your user.

Get your knowledge base connection settings

In Language Studio, open your language resource.

Copy the following information to your bot's configuration file:

- The host name for your language endpoint.

- The Ocp-Apim-Subscription-Key, which is your endpoint key.

- The project name, which acts as your knowledge base ID.

Your host name is the part of the endpoint URL between https:// and /language, for example, https://<hostname>/language. Your bot needs the project name, host URL, and endpoint key to connect to your knowledge base.
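The host name rule above can be checked with a few lines of C#. This is a minimal sketch and not part of the sample: the helper name GetLanguageHostName and the example endpoint URL are hypothetical, and it relies only on the standard System.Uri class.

```csharp
using System;

// Hypothetical helper (not from the sample): returns the part of the
// endpoint URL between "https://" and "/language".
static string GetLanguageHostName(string endpointUrl)
{
    var uri = new Uri(endpointUrl);
    return uri.Host;
}

// Placeholder endpoint; substitute the endpoint shown for your own language resource.
Console.WriteLine(GetLanguageHostName("https://contoso-language.cognitiveservices.azure.com/language"));
// prints "contoso-language.cognitiveservices.azure.com"
```

Parsing with Uri rather than trimming strings by hand avoids mistakes with a trailing slash or extra path segments.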

Tip

If you aren't deploying this for production, you can leave your bot's app ID and password fields blank.
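For the C# sample, the settings described in this section might end up in appsettings.json looking roughly like the fragment below. The key names are an assumption based on the sample and can differ between versions, so confirm them against the appsettings.json that ships with your copy. The angle-bracket values are placeholders.

```json
{
  "MicrosoftAppId": "",
  "MicrosoftAppPassword": "",
  "ProjectName": "<your-project-name>",
  "LanguageEndpointKey": "<your-endpoint-key>",
  "LanguageEndpointHostName": "<your-hostname>"
}
```

The app ID and password are left blank here, which matches the tip above for bots that aren't deployed to production.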

Set up and call the knowledge base client

Create your knowledge base client, then use the client to retrieve answers from the knowledge base.

Be sure that the Microsoft.Bot.Builder.AI.QnA NuGet package is installed for your project.

In CustomQABot.cs, in the OnMessageActivityAsync method, create a knowledge base client. Use the turn context to query the knowledge base.

Bots/CustomQABot.cs

    using var httpClient = _httpClientFactory.CreateClient();
    var customQuestionAnswering = CreateCustomQuestionAnsweringClient(httpClient);

    // Call Custom Question Answering service to get a response.
    _logger.LogInformation("Calling Custom Question Answering");
    var options = new QnAMakerOptions { Top = 1, EnablePreciseAnswer = _enablePreciseAnswer };
    var response = await customQuestionAnswering.GetAnswersAsync(turnContext, options);
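The snippet above ends at the call to the service. As described earlier, the best returned answer is then shown to the user. The following is a minimal, self-contained sketch of that selection step, not the sample's own code: the Answer record is a hypothetical stand-in for the SDK's result type, and ChooseReply and the fallback text are illustrative names and values.

```csharp
using System;
using System.Linq;

// Hypothetical helper: return the text of the highest-scoring answer,
// or the fallback text when the knowledge base returned nothing.
static string ChooseReply(Answer[] results, string defaultAnswer)
{
    if (results == null || results.Length == 0)
    {
        return defaultAnswer;
    }

    return results.OrderByDescending(r => r.Score).First().Text;
}

var found = new[] { new Answer("Sign in at the portal.", 0.92f) };
Console.WriteLine(ChooseReply(found, "No answers were found."));
// prints "Sign in at the portal."
Console.WriteLine(ChooseReply(Array.Empty<Answer>(), "No answers were found."));
// prints "No answers were found."

// Hypothetical stand-in for the SDK's result type, so the sketch runs on its own.
record Answer(string Text, float Score);
```

In the bot itself, the chosen text would be sent back through the turn context instead of being written to the console.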

Test the bot

Run the sample locally on your machine. If you haven't done so already, install the Bot Framework Emulator. For further instructions, refer to the sample's README (C# or JavaScript).

Start the Emulator, connect to your bot, and send messages to your bot. The responses to your questions will vary, based on the information in your knowledge base.

Additional information

The Custom Question Answering, all features sample (C# or JavaScript) shows how to use a QnA Maker dialog to support a knowledge base's follow-up prompt and active learning features.

- Question answering supports follow-up prompts, also known as multi-turn prompts. If the knowledge base requires more information from the user, the service sends context information that you can use to prompt the user. This information is also used to make any follow-up calls to the service.

- Question answering also supports active learning suggestions, allowing the knowledge base to improve over time. The QnA Maker dialog supports explicit feedback for the active learning feature.